Key Points

- Data pipelines connect data sources to data targets and transform the data along the way, in batches or as event streams

- Spark is an interactive set of data stream services, comparable to the batch services of Hadoop

- Spark supports many data sources, including messaging services (Kafka, MQ, etc.)

References

Key Concepts

Spark

Spark powers a stack of libraries including SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming. You can combine these libraries seamlessly in the same application.

Learning Apache Spark is easy whether you come from a Java, Scala, Python, R, or SQL background:

df = spark.read.json("logs.json")

df.where("age > 21").select("name.first").show()

Read JSON files with automatic schema inference

Spark Quick Start

This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark’s interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python.

To follow along with this guide, first, download a packaged release of Spark from the Spark website. Since we won’t be using HDFS, you can download a package for any version of Hadoop.

Spark SQL Guide

Spark in Action book

https://drive.google.com/open?id=1ZqKpzFcCgy3r4lyeuN2t2gINpegHIte9

Combine Spark with Airflow, and with Koalas to get a pandas API on Spark

Bryan Cafferky on Spark

Arrow creates optimized in-memory data stores for Spark that any language client can use

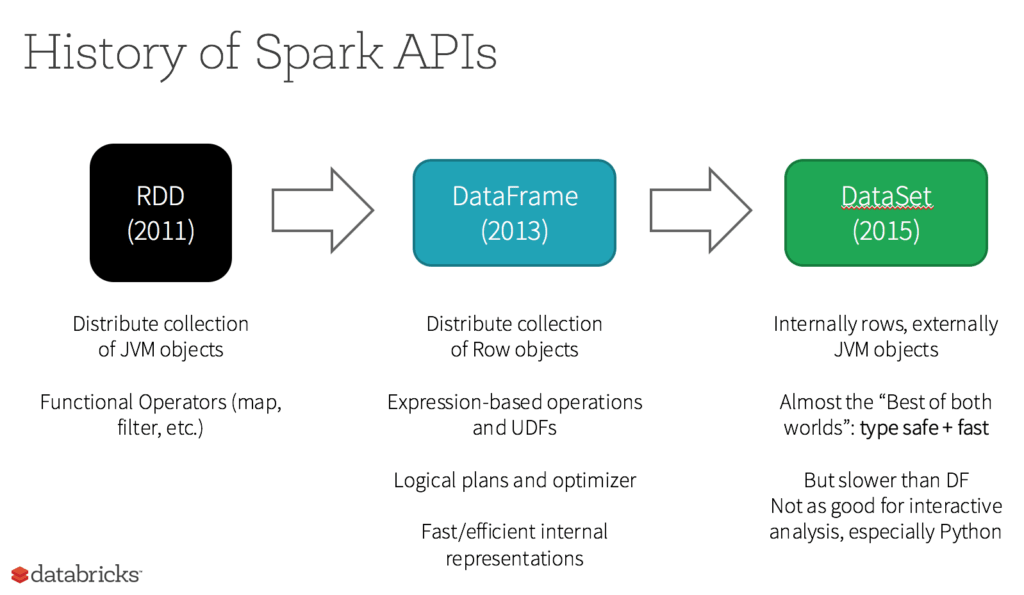

RDD or Resilient Distributed Dataset

An RDD, or Resilient Distributed Dataset, is the fundamental data structure of Apache Spark. RDDs are immutable (read-only) collections of objects of varying types, computed on the different nodes of a cluster.

https://databricks.com/glossary/what-is-rdd

The RDD has been the primary user-facing API in Spark since its inception. At its core, an RDD is an immutable distributed collection of data elements, partitioned across the nodes in your cluster, that can be operated on in parallel with a low-level API offering transformations and actions.
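As a rough illustration of transformations and actions, the sketch below counts error lines per host with the low-level RDD API. The sample log lines, the helper names (`is_error`, `host_of`, `count_errors_by_host`, `main`) and the `local[2]` master are assumptions for illustration, not from the source. `pyspark` is imported inside `main()` only so the helpers can be read and tried without a Spark installation.

```python
# Hypothetical sample data: "<host> <level> <message>" log lines.
SAMPLE_LINES = [
    "web1 ERROR disk full",
    "web2 INFO request served",
    "web1 ERROR timeout",
    "web3 ERROR timeout",
]


def is_error(line):
    """Predicate for the filter transformation."""
    return line.split()[1] == "ERROR"


def host_of(line):
    """Key function for the map transformation: (host, 1)."""
    return (line.split()[0], 1)


def count_errors_by_host(sc, lines):
    """Build an RDD pipeline; nothing runs until the collect() action."""
    rdd = sc.parallelize(lines, 2)                   # split into 2 partitions
    errors = rdd.filter(is_error)                    # transformation (lazy)
    pairs = errors.map(host_of)                      # transformation (lazy)
    counts = pairs.reduceByKey(lambda a, b: a + b)   # transformation (lazy)
    return dict(counts.collect())                    # action: triggers the job


def main():
    # Requires a local Spark installation (pip install pyspark).
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[2]").appName("rdd-sketch").getOrCreate()
    try:
        print(count_errors_by_host(spark.sparkContext, SAMPLE_LINES))
    finally:
        spark.stop()
```

Calling `main()` should report two errors for `web1` and one for `web3`. Each transformation returns a new RDD rather than modifying the old one, which is what immutability means in practice here.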

5 Reasons to Use RDDs

- You want low-level transformation and actions and control on your dataset;

- Your data is unstructured, such as media streams or streams of text;

- You want to manipulate your data with functional programming constructs rather than domain-specific expressions;

- You don’t care about imposing a schema, such as columnar format while processing or accessing data attributes by name or column; and

- You can forgo some optimization and performance benefits available with DataFrames and Datasets for structured and semi-structured data.
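The trade-off in the third and fifth points can be made concrete by writing the same filter twice: once as a Python function over an RDD, and once as a DataFrame expression on named columns. This is a minimal sketch; the `PEOPLE` rows, the function names and the `local[2]` master are assumptions for illustration, and `pyspark` is imported inside `main()` so the plain-Python parts can be tried on their own.

```python
# Hypothetical sample rows; names and ages are made up for illustration.
PEOPLE = [("Ana", 34), ("Ben", 19), ("Cho", 27)]


def is_over_21(person):
    """Plain Python predicate: Spark runs it as-is and cannot optimize it."""
    _name, age = person
    return age > 21


def names_over_21_rdd(sc, people):
    """Functional style: arbitrary Python functions on schema-less tuples."""
    rdd = sc.parallelize(people)
    return rdd.filter(is_over_21).map(lambda p: p[0]).collect()


def names_over_21_df(spark, people):
    """Domain-specific style: named columns and a declarative expression."""
    df = spark.createDataFrame(people, ["name", "age"])
    rows = df.where("age > 21").select("name").collect()
    return [row["name"] for row in rows]


def main():
    # Requires a local Spark installation (pip install pyspark).
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[2]").appName("rdd-vs-df").getOrCreate()
    try:
        print(names_over_21_rdd(spark.sparkContext, PEOPLE))
        print(names_over_21_df(spark, PEOPLE))
    finally:
        spark.stop()
```

Both functions select the same people. The RDD version gives full control over the per-element logic, while the DataFrame version hands Spark a schema and an expression it can plan and optimize.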

Create a DataFrame from a table with Spark SQL

Plot it
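One possible way to carry out these two steps in PySpark is sketched below. The table name `sales`, its columns, the sample rows and the output file `totals.png` are all hypothetical; the sketch registers the rows as a temporary view so that it has a table to query, and it assumes pandas and matplotlib are installed alongside pyspark.

```python
# Hypothetical table contents; the table name "sales" is also an assumption.
SALES_ROWS = [("north", 120.0), ("south", 80.0), ("north", 30.0)]
QUERY = "SELECT region, SUM(amount) AS total FROM sales GROUP BY region ORDER BY region"


def totals_by_region(spark):
    """Register sample rows as a temp view, then query it with Spark SQL."""
    df = spark.createDataFrame(SALES_ROWS, ["region", "amount"])
    df.createOrReplaceTempView("sales")
    return spark.sql(QUERY)  # the query result is itself a DataFrame


def plot_totals(df, path="totals.png"):
    """Collect the (small) result to pandas and draw a bar chart."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed

    pdf = df.toPandas()    # brings all rows to the driver; keep results small
    ax = pdf.plot.bar(x="region", y="total", legend=False)
    ax.figure.savefig(path)
    return path


def main():
    # Requires pyspark, pandas and matplotlib to be installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[2]").appName("sql-plot").getOrCreate()
    try:
        print(plot_totals(totals_by_region(spark)))
    finally:
        spark.stop()
```

For a table that already exists in the catalog, the temp-view step can be dropped and `spark.sql` pointed at that table directly. Aggregating in Spark before calling `toPandas()` keeps the data pulled to the driver small.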

Each language has a separate shell (R, PySpark, etc.)

Spark SQL

Potential Value Opportunities

Potential Challenges

Candidate Solutions

Step-by-step guide for Example
