Identity Management security concepts

Key Points

- proprietary and open-source solutions for apps and services

- ToIP - interoperable identities, wallets, etc.

References

Key Concepts

Identity Management

ToIP - Trust Over IP Identity Management standards

interactive trust model << key demo

https://trustoverip.org/wp-content/toip-model/

The ToIP Foundation is the only project defining a full stack for digital trust infrastructure that includes both technical interoperability of blockchains, digital wallets and digital credentials AND policy interoperability of the governance frameworks needed for these solutions to meet the business, legal and social requirements of different jurisdictions and industries around the world.

The ToIP stack will reference open standards for specific components at specific layers, such as the W3C standards for Verifiable Credentials and Decentralized Identifiers (DIDs). It will also reference ToIP stack components being defined by working groups at DIF, Hyperledger, the W3C Credentials Community Group, and other open source and open standard projects worldwide.

Layers of the ToIP solution stack

Article - ToIP Foundation - Vipin Bharathan

https://www.forbes.com/sites/vipinbharathan/2020/05/09/trust-is-foundational/#4e9a44a4a61e

Digital Trust problems

The basic protocol of the internet, IP (the Internet Protocol), does not have identity built into it. That is fine, since the way most of us interact with the internet is over the technical and governance stack of layers of protocols. However, a rock-solid trust framework does not figure in any of the layers.

Without basic trust protocols, we use intermediaries for digital trust: a corporate Web site, Facebook, Google, Microsoft, IBM and many others.

ToIP to the Rescue

ToIP wants to remove the intermediary and put the user, or holder, on a better footing than today. The basic interaction for trust involves a three-legged, or three-party, interaction.

Credentials have 3 key parties - holder, verifier, issuer

The three interacting parties are the smallest unit of every trust relationship. They deal in credentials, such as driver's licenses, passports, university degrees, professional accreditations, birth certificates and business licenses. The issuer gives the credential to the holder, and the holder presents the credential to the verifier. The verifier can trust the holder because the issuer has vetted them and issued a credential.
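The issue, present and verify steps above can be sketched as a toy model. This is only an illustration under loud assumptions: an HMAC tag stands in for the issuer's digital signature so that the sketch runs on the Python standard library, and the names `ISSUER_KEY`, `issue_credential` and `verify_credential` are hypothetical. Real verifiable credentials use asymmetric signatures, so the verifier needs only the issuer's public key and never shares a secret with the issuer.

```python
import hashlib
import hmac
import json
import secrets

# ASSUMPTION: an HMAC tag stands in for the issuer's digital signature.
# In a real deployment the verifier would check an asymmetric signature
# against the issuer's public key instead of sharing this secret.
ISSUER_KEY = secrets.token_bytes(32)  # hypothetical issuer signing key


def issue_credential(subject: str, claim: str, key: bytes = ISSUER_KEY) -> dict:
    """Issuer: vet the subject (not shown) and return a signed credential."""
    payload = {"subject": subject, "claim": claim}
    body = json.dumps(payload, sort_keys=True).encode()
    proof = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "proof": proof}


def verify_credential(credential: dict, key: bytes = ISSUER_KEY) -> bool:
    """Verifier: recompute the proof and compare it in constant time."""
    body = json.dumps(credential["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, credential["proof"])


# Holder: receives the credential from the issuer and later presents it.
credential = issue_credential("alice", "licensed-driver")
print(verify_credential(credential))  # untampered credential

# A credential whose payload was altered after issuance keeps the old proof.
tampered = {
    "payload": {"subject": "mallory", "claim": "licensed-driver"},
    "proof": credential["proof"],
}
print(verify_credential(tampered))  # altered payload
```

The verifier never contacts the holder's other relying parties. It only needs a way to check the issuer's proof, which is the property the three-party model relies on.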

Governance layer for a trust domain (or realm)

Provides the rules and monitoring to ensure the 3 parties follow defined guidelines in creating identities, issuing credentials and verifying credentials for a given domain. Using blockchain and smart contracts, this governance may be decentralized in a peer-to-peer model, eliminating the need for centralized trust services in some instances.

Certificate Management and Encryption Solutions

Key management

Key rotation design

OpenSSL

https://docs.openssl.org/3.4/man7/ossl-guide-introduction/

https://docs.openssl.org/master/

https://docs.openssl.org/3.4/man7/ossl-guide-migration/

https://github.com/openssl/openssl/

https://docs.openssl.org/master/man7/fips_module/

The OpenSSL toolkit includes:

libssl an implementation of all TLS protocol versions up to TLSv1.3 (RFC 8446), DTLS protocol versions up to DTLSv1.2 (RFC 6347) and the QUIC (currently client side only) version 1 protocol (RFC 9000).

libcrypto a full-strength general purpose cryptographic library. It constitutes the basis of the TLS implementation, but can also be used independently.

openssl the OpenSSL command line tool, a swiss army knife for cryptographic tasks, testing and analyzing. It can be used for

- creation of key parameters

- creation of X.509 certificates, CSRs and CRLs

- calculation of message digests

- encryption and decryption

- SSL/TLS/DTLS and client and server tests

- QUIC client tests

- handling of S/MIME signed or encrypted mail

- and more...

- OpenSSLUI: an open-source project on SourceForge that provides a GUI for many OpenSSL functions, which can be integrated into a web application with some development effort.

- Custom Development:

- Node.js with OpenSSL bindings: Use a Node.js backend with a library like "node-openssl" to directly call OpenSSL functions and build a web interface.

- Python with cryptography library: Leverage the Python "cryptography" library (which is built on top of OpenSSL) to create a web-based interface for encryption/decryption tasks.

Bouncy Castle Encryption libraries for Java, C#

The "Legion of the Bouncy Castle" is an Australian charity that manages and maintains the open-source Bouncy Castle cryptographic APIs, a set of libraries for encryption and other cryptographic functions in software development, primarily for the Java and C# platforms.

Certbot - obtains server certificates from Let's Encrypt - eos

If you are looking for a simple certificate management utility, Certbot is a widely recommended option: it is open source, user-friendly, and automatically obtains and renews Let's Encrypt certificates for your website, so HTTPS can be set up with minimal effort. For more advanced features and management across many certificates, consider tools such as DigiCert CertCentral or Sectigo Certificate Manager, depending on your needs.

- Certbot:

- Best for simple website certificate management.

- Automatically acquires and renews Let's Encrypt certificates.

- Open-source and widely supported.

- DigiCert CertCentral:

- Offers a user-friendly interface to manage multiple DigiCert certificates.

- Provides features for automation, renewal reminders, and lifecycle management.

- Sectigo Certificate Manager:

- Comprehensive solution for managing certificates from various Certificate Authorities (CAs).

- Advanced features for discovery, deployment, and monitoring of certificates across your infrastructure.

Use of Nonce, Salt, IV in cryptography

- When logging into a website, the server generates a unique nonce and sends it to the client, which then includes the nonce in their login credentials when sending them back to the server. This prevents an attacker from capturing the login information and replaying it later because the nonce is only valid once.

- When a user registers on a website, a random salt is generated and stored alongside their hashed password. This means that even if two users choose the same password, their hashed versions will be different due to the unique salt added to each.

- Usage: A nonce is primarily used in communication protocols to verify the freshness of a message, while a salt is primarily used in password hashing to defeat precomputed-table (rainbow table) attacks and to slow down dictionary attacks against many accounts at once.

- Reusability: A nonce must only be used once. A salt is stored with the password hash and reused every time that user's password is checked, but it should be unique for each user.

- When to use a nonce: when you need to ensure a message is not replayed, such as in authentication protocols or when sending sensitive data.

- When to use a salt: when storing passwords, to prevent attackers from easily cracking them using precomputed tables.
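The salt and nonce behaviour described above can be sketched with the Python standard library. This is a minimal sketch, not a production login system: the iteration count, the `NonceLogin` class and the shared-secret challenge-response scheme are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

ITERATIONS = 100_000  # ASSUMPTION: illustrative work factor, tune for real use


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest). A fresh random salt is created at registration."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def check_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


class NonceLogin:
    """Hypothetical server side of a challenge-response login."""

    def __init__(self, shared_secret: bytes) -> None:
        self._secret = shared_secret
        self._outstanding = set()  # nonces issued but not yet used

    def challenge(self) -> str:
        nonce = secrets.token_hex(16)
        self._outstanding.add(nonce)
        return nonce

    def verify(self, nonce: str, response: str) -> bool:
        if nonce not in self._outstanding:
            return False  # unknown or already used: treat as a replay
        self._outstanding.discard(nonce)  # a nonce is valid only once
        expected = hmac.new(self._secret, nonce.encode(), hashlib.sha256).hexdigest()
        return hmac.compare_digest(expected, response)


def client_response(shared_secret: bytes, nonce: str) -> str:
    """Client side: prove knowledge of the secret for this nonce only."""
    return hmac.new(shared_secret, nonce.encode(), hashlib.sha256).hexdigest()
```

Two users who register with the same password get different digests because each call draws a new salt, and a captured `(nonce, response)` pair is rejected the second time it is submitted.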

Salts, Nonces, IVs article what's the difference - medium 2020

security-Salt, Nonces and IVs.. What’s the difference_ _ by Frida Casas _ Medium.pdf. link

Then there is so much more in other patterns to consider

Authentication Management

identity-vendor-market-guide-2023-Gartner Reprint.pdf. link


Key Findings

- Buyers’ needs are evolving beyond traditional know your customer (KYC) use cases. Identity verification is now being deployed more broadly in marketplace, workforce and security-focused use cases, which is increasing demand for identity verification solutions.

- The market is entering a transition period as portable digital identity solutions are starting to mature, which in the next five years will reduce the demand for identity verification solutions.

- Competition in the market is fierce, with a large number of vendors offering what appear to be similar core identity verification processes. This is causing confusion among buyers, and forcing vendors to offer additional features such as enhanced fraud detection and low-code integrations in order to differentiate.

- Concern is growing among organizations about whether AI-enabled attacks using deepfakes will undermine the integrity of the identity verification process or in a worst case scenario, render it worthless.

Recommendations

For security and risk management leaders responsible for identity and access management and fraud detection:

- Maximize the value of your investment when using an identity verification solution by exploring if multiple use cases exist within your organization where the technology can improve security and/or user experience (UX) across both workforce and customer interfaces.

- Prepare for a future built on portable digital identity by assessing identity verification vendors’ strategies to remain relevant in the face of market changes, as well as monitoring emerging technologies and testing them where they may meet a business need.

- Differentiate between vendors by focusing on features outside of the core identity verification process, such as low-code implementation, enhanced fraud detection, and connectivity to data affirmation sources such as identity graphs and government issuing authorities.

- Seek assurance from vendors on resistance to deepfake attacks by requesting insights on their current experience and awareness of the challenge, detection capabilities already in place, and evidence of investment in a forward-looking roadmap to stay abreast of the challenges that deepfakes pose. Be suspicious of any vendor not proactively providing you with information about the threat that deepfakes pose and how the vendor addresses it.

Identity Proofing

https://www.nist.gov/system/files/nstic-strength-identity-proofing-discussion-draft.pdf

Identity proofing is used to establish the uniqueness and validity of an individual’s identity to facilitate the provision of an entitlement or service, and may rely upon various factors such as identity documents, biographic information, biometric information, and knowledge of personally-relevant information or events.

It can provide assurances that individuals are appropriately proofed before they are bound to the credential that they use to access an online transaction.

Existing Solutions

In person identity proofing

Identity proofing to support the issuance of physical credentials, such as driver's licenses and passports, is an established field that is typically based on an in-person registration event where “breeder documents” are presented, verified as authentic, and confirmed to have been legitimately issued to the person claiming the documents belong to them.

Remote identity proofing

Remote identity proofing involves validating and verifying presented data against one or more corroborating authoritative sources of data. The amount and types of confirmed data influence the performance of remote proofing systems and commonly include attributes that can uniquely resolve a person in a given population, such as name, address, date of birth, and place of birth, as well as data considered private, such as account numbers or the amount of a loan or tax payment. Some instantiations of remote proofing also include a virtual session where a user may digitally present documents for verification.

Verification of presented identity information

US Real ID

Access Management (RBAC)

Potential Value Opportunities

Potential Challenges

m Public Sector Sessions 1 - Poll-Should US require a national identity to vote ?

Candidate Solutions

Apache Syncope - IAM

https://syncope.apache.org/iam-scenario

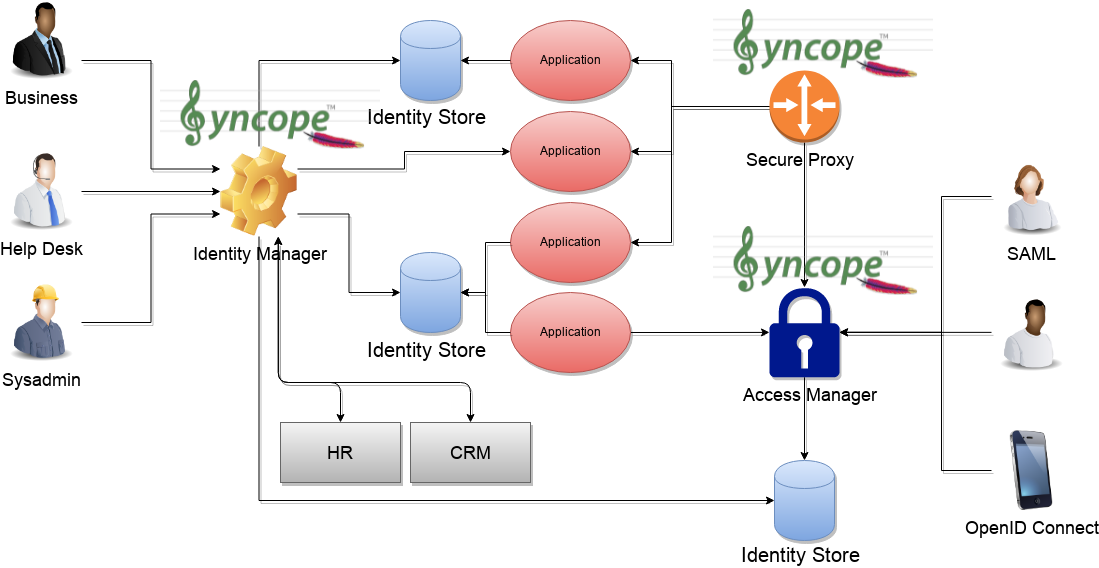

The picture above shows the technologies involved in a complete IAM solution:

- Identity Store (as RDBMS, LDAP, Active Directory, meta- and virtual-directories): the repository for account data

- Provisioning Engine: synchronizes account data across identity stores and a broad range of data formats, models, meanings and purposes

- Access Manager: access mediator to all applications, focused on application front-end, taking care of authentication (Single Sign-On), authorization (OAuth, XACML) and federation (SAML, OpenID Connect).

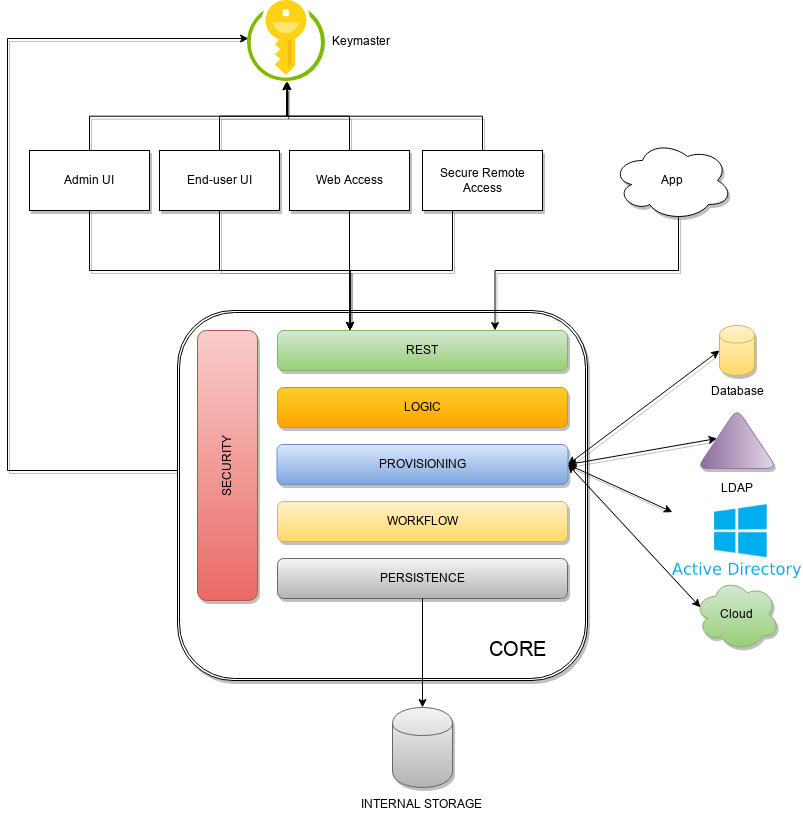

Architecture

https://syncope.apache.org/architecture

Admin UI is the web-based console for configuring and administering running deployments, with full support for delegated administration.

End-user UI is the web-based application for self-registration, self-service and password reset

CLI is the command-line application for interacting with Apache Syncope from scripts, particularly useful for system administrators.

Core is the central component, providing all services offered by Apache Syncope.

It exposes a fully-compliant JAX-RS 2.0 RESTful interface which enables third-party applications, written in any programming language, to consume IdM services.

Logic implements the overall business logic that can be triggered via REST services, and controls some additional features (notifications, reports and auditing).

Provisioning is involved with managing the internal (via workflow) and external (via specific connectors) representation of users, groups and any objects.

This component often needs to be tailored to meet the requirements of a specific deployment, as it is the crucial decision point for defining and enforcing the consistency and transformations between internal and external data. The default all-Java implementation can be extended for this purpose. In addition, an Apache Camel-based implementation is also available as an extension, which brings all the power of runtime changes and adaptation.

Workflow is one of the pluggable aspects of Apache Syncope: this lets every deployment choose the preferred engine from a provided list - including the one based on Flowable BPM, the reference open source BPMN 2.0 implementation - or define new, custom ones.

Persistence manages all data (users, groups, attributes, resources, …) at a high level using a standard JPA 2.0 approach. The data is persisted to an underlying database, referred to as Internal Storage . Consistency is ensured via the comprehensive transaction management provided by the Spring Framework.

Globally, this offers the ability to easily scale up to a million entities and at the same time allows great portability with no code changes: MySQL, MariaDB, PostgreSQL, Oracle and MS SQL Server are fully supported deployment options.

Security defines a fine-grained set of entitlements which can be granted to administrators, thus enabling the implementation of delegated administration scenarios.

Third-party applications are provided full access to IdM services by leveraging the REST interface, either via the Java SyncopeClient library (the basis of Admin UI, End-user UI and CLI) or plain HTTP calls.

ConnId

The Provisioning layer relies on ConnId; ConnId is designed to separate the implementation of an application from the dependencies of the system that the application is attempting to connect to.

ConnId is the continuation of The Identity Connectors Framework (Sun ICF), a project that used to be part of market leader Sun IdM and has since been released by Sun Microsystems as an Open Source project. This makes the connectors layer particularly reliable because most connectors have already been implemented in the framework and widely tested.

The new ConnId project, featuring contributors from several companies, provides all that is required nowadays for a modern Open Source project, including an Apache Maven driven build, artifacts and mailing lists. Additional connectors – such as for SOAP, CSV, PowerShell and Active Directory – are also provided.

Syncope Concepts

https://cwiki.apache.org/confluence/display/SYNCOPE/Concepts

A little insight to Syncope internals.

Resources can be synchronized with external repositories

Resources are used both for synchronization and for propagation (i.e. inbound and outbound changes); the two different use cases can be described like this, using the terms of Syncope:

- users (and roles, starting from release 1.1.0) stored in an external resource can be synchronized to Syncope using a connector instance

- users (and roles, starting from release 1.1.0) stored in Syncope can be propagated to external resources using connector instances.

Syncope manual

http://syncope.apache.org/docs/reference-guide.html#introduction

Downloads

https://syncope.apache.org/downloads

https://cwiki.apache.org/confluence/display/SYNCOPE/Roadmap

PING IAM

https://www.pingidentity.com/en/resources/identity-fundamentals/identity-and-access-management.html

https://www.pingidentity.com/en.htm

https://www.pingidentity.com/en/solutions.html

https://www.pingidentity.com/en/platform.html

COMPARE Ping and WSO2 IAM

https://www.gartner.com/reviews/market/access-management/compare/ping-identity-vs-wso2inc

WSO2 IAM

https://wso2.com/what-is-identity-access-management/

IAM has its challenges too. User access management, application, containers, microservices, and integration spaces have grown in complexity and become more decentralized. As a result, complexities arise for identity silos, securing an increasing number of APIs and endpoints, account management, user password maintenance, regulatory compliance (such as GDPR and CCPA), and licensing and software costs when implementing IAM solutions.

In this scenario, an open source IAM provider such as WSO2 Identity Server helps to meet these challenges. WSO2 Identity Server aims to address both API and user domains while providing an enhanced user experience as part of WSO2’s Integration Agile Platform. It’s a highly extensible IAM product, designed to secure APIs and microservices and enable Customer Identity and Access Management (CIAM). Other key features include identity federation, SSO, strong and adaptive authentication, and privacy compliance. WSO2 Identity Server is a single solution for common identity requirements, in contrast to most identity platforms.

https://wso2.com/whitepapers/a-guide-to-wso2-identity-server/

https://wso2.com/identity-and-access-management/

https://wso2.com/identity-server/#Identity-Server-7-Release-Webinar

Keycloak - Open Source Identity and Access Management with SSO

https://www.keycloak.org/documentation

https://www.keycloak.org/guides

Open Source Identity and Access Management

Add authentication to applications and secure services with minimum effort.

No need to deal with storing users or authenticating users.

Keycloak provides user federation, strong authentication, user management, fine-grained authorization, and more.

Implement SSO with a Keycloak server acting as the OpenID Connect provider - https://www.keycloak.org/

Single Sign-On

Users authenticate with Keycloak rather than individual applications. This means that your applications don't have to deal with login forms, authenticating users, and storing users. Once logged-in to Keycloak, users don't have to login again to access a different application.

This also applies to logout. Keycloak provides single-sign out, which means users only have to logout once to be logged-out of all applications that use Keycloak.

Identity Brokering and Social Login

Enabling login with social networks is easy to add through the admin console. It's just a matter of selecting the social network you want to add. No code or changes to your application is required.

Keycloak can also authenticate users with existing OpenID Connect or SAML 2.0 Identity Providers. Again, this is just a matter of configuring the Identity Provider through the admin console.

User Federation

Keycloak has built-in support to connect to existing LDAP or Active Directory servers. You can also implement your own provider if you have users in other stores, such as a relational database.

Admin Console

Through the admin console administrators can centrally manage all aspects of the Keycloak server.

They can enable and disable various features. They can configure identity brokering and user federation.

They can create and manage applications and services, and define fine-grained authorization policies.

They can also manage users, including permissions and sessions.

Account Management Console

Through the account management console users can manage their own accounts. They can update the profile, change passwords, and setup two-factor authentication.

Users can also manage sessions as well as view history for the account.

If you've enabled social login or identity brokering users can also link their accounts with additional providers to allow them to authenticate to the same account with different identity providers.

Standard Protocols

Keycloak is based on standard protocols and provides support for OpenID Connect, OAuth 2.0, and SAML.

Authorization Services

If role based authorization doesn't cover your needs, Keycloak provides fine-grained authorization services as well. This allows you to manage permissions for all your services from the Keycloak admin console and gives you the power to define exactly the policies you need.

Introduction to Keycloak. - medium

Keycloak is an open-source identity and access management tool with a focus on modern applications such as single-page applications, mobile applications, and REST APIs.

Keycloak uses its own user database. You can also integrate with existing user directories, such as Active Directory and LDAP servers.

Realm >> create a realm for your application and users. A realm is fully isolated (in terms of configuration, users, roles, etc.) from other realms

Client >> Clients are entities that can request Keycloak to authenticate a user.

Client Scope: allows creating re-usable groups of claims that are added to tokens issued to a client

roles for RBAC

identity providers >> Keycloak has built-in support for OpenID Connect and SAML 2.0 as well as a number of social networks such as Google, GitHub, Facebook and Twitter

user federation >> capability of integrating with external identity stores.

authentication process >> sequential steps or executions that are grouped together in order to verify the user identity. You can customize this by copying existing built-in flows or changing flow priorities.

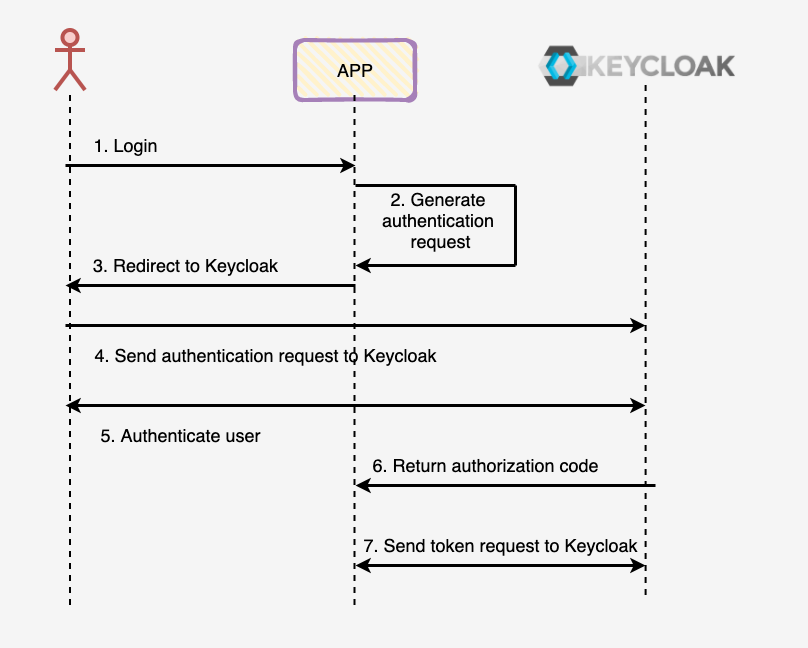

OIDC authentication in Keycloak >> the standard way to authenticate a user with Keycloak is through the OpenID Connect authorization code flow.
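The first and last steps of the authorization code flow can be sketched as follows. This is a hedged illustration only: the host, realm, client id and redirect URI are hypothetical, the endpoint path assumes a recent Keycloak release (older releases prefix the path with `/auth`), and PKCE is added as a common hardening step rather than something these notes require. Nothing is sent over the network.

```python
import base64
import hashlib
import secrets
from typing import Dict, Tuple
from urllib.parse import urlencode

# ASSUMPTIONS: hypothetical deployment values, replace with your own.
BASE_URL = "https://keycloak.example.com"
REALM = "demo"
CLIENT_ID = "demo-app"
REDIRECT_URI = "https://app.example.com/callback"
OIDC_BASE = f"{BASE_URL}/realms/{REALM}/protocol/openid-connect"


def make_pkce_pair() -> Tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method."""
    verifier = secrets.token_urlsafe(48)
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def build_authorization_url(state: str, code_challenge: str) -> str:
    """Step 1: URL the browser is redirected to so the user logs in at Keycloak."""
    params = {
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": "openid",
        "state": state,
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
    }
    return f"{OIDC_BASE}/auth?{urlencode(params)}"


def build_token_request(code: str, code_verifier: str) -> Tuple[str, Dict[str, str]]:
    """Final step: endpoint and form fields the app would POST to swap the code for tokens."""
    form = {
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": REDIRECT_URI,
        "client_id": CLIENT_ID,
        "code_verifier": code_verifier,
    }
    return f"{OIDC_BASE}/token", form


verifier, challenge = make_pkce_pair()
print(build_authorization_url(secrets.token_urlsafe(16), challenge))
```

The application should check that the `state` value returned on the callback matches the one it sent before exchanging the code.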

OKTA IAM Solutions

Okta gives you a neutral, powerful and extensible platform that puts identity at the heart of your stack. No matter what industry, use case, or level of support you need, we’ve got you covered.

https://www.okta.com/products/api-access-management

https://www.okta.com/resources/content-library/?type=datasheet

https://www.okta.com/resources/podcasts/mistaken-identity/

https://www.okta.com/webinars/hub/

https://www.okta.com/resources/content-library

Okta-IAMBuyersGuide-Whitepaper-20230215-Final.pdf. link


The stakes are high

The impact is pervasive

How to get it right

Attribute 1: Neutral and independent

Attribute 2: Customization

Attribute 3: Ease of use

Attribute 4: Reliable and secure

Conclusion

https://www.okta.com/customers/

ZUUL IAM

Zuul Wiki

https://github.com/Netflix/zuul/wiki

Zuul 2.x

Open-Sourcing Zuul article

https://medium.com/netflix-techblog/open-sourcing-zuul-2-82ea476cb2b3

We are excited to announce the open sourcing of Zuul 2, Netflix’s cloud gateway. We use Zuul 2 at Netflix as the front door for all requests coming into Netflix’s cloud infrastructure. Zuul 2 significantly improves the architecture and features that allow our gateway to handle, route, and protect Netflix’s cloud systems, and helps provide our 125 million members the best experience possible. The Cloud Gateway team at Netflix runs and operates more than 80 clusters of Zuul 2, sending traffic to about 100 (and growing) backend service clusters which amounts to more than 1 million requests per second. Nearly all of this traffic is from customer devices and browsers that enable the discovery and playback experience you are likely familiar with.

This post will overview Zuul 2, provide details on some of the interesting features we are releasing today, and discuss some of the other projects that we’re building with Zuul 2.

How Zuul 2 Works

For context, here’s a high-level diagram of Zuul 2’s architecture:

The Netty handlers on the front and back of the filters are mainly responsible for handling the network protocol, web server, connection management and proxying work. With those inner workings abstracted away, the filters do all of the heavy lifting. The inbound filters run before proxying the request and can be used for authentication, routing, or decorating the request. The endpoint filters can either be used to return a static response or proxy the request to the backend service (or origin as we call it). The outbound filters run after a response has been returned and can be used for things like gzipping, metrics, or adding/removing custom headers.
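The inbound, endpoint and outbound stages described above can be modelled as a small pipeline. This is a conceptual sketch only: Zuul itself is a Java project and this is not its API. The `Request`, `Response` and filter names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str = ""
    headers: Dict[str, str] = field(default_factory=dict)


def authenticate(request: Request) -> Request:
    """Inbound filter: decorate the request with an authentication result."""
    has_token = "authorization" in request.headers
    request.headers["x-authenticated"] = "true" if has_token else "false"
    return request


def endpoint(request: Request) -> Response:
    """Endpoint filter: return a static response or 'proxy' to the origin."""
    if request.headers.get("x-authenticated") != "true":
        return Response(401, "unauthorized")  # static response, origin not called
    return Response(200, f"proxied {request.path}")  # stand-in for a real origin call


def add_gateway_header(response: Response) -> Response:
    """Outbound filter: add a custom header after the response comes back."""
    response.headers["x-gateway"] = "sketch"
    return response


def handle(
    request: Request,
    inbound: List[Callable[[Request], Request]],
    endpoint_filter: Callable[[Request], Response],
    outbound: List[Callable[[Response], Response]],
) -> Response:
    for inbound_filter in inbound:
        request = inbound_filter(request)
    response = endpoint_filter(request)
    for outbound_filter in outbound:
        response = outbound_filter(response)
    return response


ok = handle(Request("/titles", {"authorization": "Bearer t"}),
            [authenticate], endpoint, [add_gateway_header])
print(ok.status, ok.body, ok.headers)
```

Because behaviour lives in the filter lists, the same `handle` core can be deployed with fewer filters for internal traffic, which mirrors the deployment choice the article describes.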

Zuul’s functionality depends almost entirely on the logic that you add in each filter. That means you can deploy it in multiple contexts and have it solve different problems based on the configurations and filters it is running.

We use Zuul at the entrypoint of all external traffic into Netflix’s cloud services and we’ve started using it for routing internal traffic, as well. We deploy the same core but with a substantially reduced amount of functionality (i.e. fewer filters). This allows us to leverage load balancing, self service routing, and resiliency features for internal traffic.

Open Source

The Zuul code that’s running today is the most stable and resilient version of Zuul yet. The various phases of evolving and refactoring the codebase have paid dividends and we couldn’t be happier to share it with you.

Today we are releasing many core features. Here are the ones we’re most excited about:

Server Protocols

- HTTP/2 — full server support for inbound HTTP/2 connections

- Mutual TLS — allow for running Zuul in more secure scenarios

Resiliency Features

- Adaptive Retries — the core retry logic that we use at Netflix to increase our resiliency and availability

- Origin Concurrency Protection — configurable concurrency limits to protect your origins from getting overloaded and protect other origins behind Zuul from each other
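The idea behind origin concurrency protection can be sketched with a per-origin limit on in-flight requests. This is an assumption-laden illustration, not Netflix's implementation: the `OriginLimiter` class, the origin names, the limit of 2 and the 503 rejection status are all hypothetical.

```python
import threading
from typing import Callable, Dict


class OriginLimiter:
    """Caps the number of requests in flight to one origin."""

    def __init__(self, max_concurrent: int) -> None:
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def try_acquire(self) -> bool:
        # Non-blocking: fail fast instead of queueing behind a slow origin.
        return self._slots.acquire(blocking=False)

    def release(self) -> None:
        self._slots.release()


# One limiter per origin, so an overloaded origin cannot starve the others.
limiters: Dict[str, OriginLimiter] = {
    "origin-a": OriginLimiter(2),
    "origin-b": OriginLimiter(2),
}


def proxy(origin: str, call: Callable[[], int]) -> int:
    """Run `call` against the origin, or reject when its limit is reached."""
    limiter = limiters[origin]
    if not limiter.try_acquire():
        return 503  # ASSUMPTION: reject with Service Unavailable
    try:
        return call()
    finally:
        limiter.release()
```

Keeping a separate limiter for each origin is what lets the gateway shed load for one saturated backend while still serving the rest.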

Operational Features

- Request Passport — track all the lifecycle events for each request, which is invaluable for debugging async requests

- Status Categories — an enumeration of possible success and failure states for requests that are more granular than HTTP status codes

- Request Attempts — track proxy attempts and status of each, particularly useful for debugging retries and routing

We are also working on some features that will be coming soon, including:

- Websocket/SSE — support for side-channel push notifications

- Throttling and rate-limiting — protection from malicious client connections and requests, helping defend against volumetric attacks

- Brownout filters — for disabling certain CPU-intensive features when Zuul is overloaded

- Configurable routing — file-based routing configuration, instead of having to create routing filters in Zuul

We would love to hear from you and see all the new and interesting applications of Zuul. For instructions on getting started, please visit our wiki page.
