GCP - Google Cloud

Key Points

GCP services map to Apache open-source projects

10 reasons why GCP is a good cloud provider

References

Key Concepts

Potential Value Opportunities

Potential Challenges

Tracking Costs of File Serving vs Larger App Sizes

wes [8:19 AM]

@jake @piotr.s.brainhub @jvila

Give me your thoughts on this:

Recently (last week) I made updates to both inventory and vehicle-info services related to the local cache for decoded vehicles.

We had a file that was previously hosted in Google Cloud and it was moved directly to the repo so the files could be deployed with the app directly.

While I’d prefer to have the file hosted, the cost of bandwidth transfer from Google Cloud skyrocketed, and we had no choice but to make that change to stop the bleeding temporarily. I will be evaluating other storage options from which we can pull down a 350-400MB file at the start of every instance of inventory and vehicle-info, but we have about 30 instances across both of those… and that is in every space in every region. So that is a 350MB file being downloaded 90 times whenever we deploy those two services with any PR merge, etc… and that’s just D1.

So - I moved the files as split, compressed files to the repo and deploy with the app.

Works great, runs fast… but we now have that storage in the app.

I did not increase the disk_quota for these services when that change was made.

We currently have the default disk_quota for file storage of every container set… which is 1GB.

Running into heap allocation issues

This may be due to the fact that disk_quota was not increased after moving the gzipped decoded vehicle files to the service.

They are about 350MB in compressed size, but the process to uncompress the files with piped streams may be placing too much burden on the existing 1GB of disk_quota (the uncompressed size of the files is ~2.5GB).

The disk storage seems to be okay with just the deployed files (570MB of the 1GB).

However, the 350MB that is the sum of the aggregated split files is just the compressed size.

When expanded, it is about 2.5GB.

I am not actually expanding the file and saving it to the disk.

It is working on a piped stream that has the uncompressed data being piped.

Regardless, I’m seeing heap allocation issues in vehicle-info. It also seems to be happening during the startup script which is when the cache is built from the compressed files.

I am going to increase the size of the disk_quota to see if that gives any breathing room for virtual memory to solve the heap allocation issue I’m seeing, but this is really just my first guess.

Any thoughts?

@piotr.s.brainhub haven’t we had these changes running for a week already without issue? That’s what’s confusing about whether this is actually related to the issue I’m describing above.
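For reference, the piped-stream approach described in the thread can be sketched as follows. This is only an illustration in Python (the runtime of inventory and vehicle-info is not stated here), and it assumes the split files are consecutive byte ranges of a single gzip stream. The function names and the 64KB buffer size are hypothetical. The point it shows: when nothing is written to disk and each chunk is dropped after use, the ~2.5GB of uncompressed data never has to exist in one place, so memory pressure would come from whatever the cache builder keeps, not from the decompression itself.

```python
import zlib
from typing import Iterable, Iterator

CHUNK_SIZE = 64 * 1024  # assumed buffer size; tune for the real workload


def iter_part_chunks(part_paths: Iterable[str], chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield the raw bytes of each split part, in order, one chunk at a time."""
    for path in part_paths:
        with open(path, "rb") as part:
            while True:
                chunk = part.read(chunk_size)
                if not chunk:
                    break
                yield chunk


def stream_decompressed(part_paths: Iterable[str], chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Decompress the concatenated parts as one gzip stream without touching disk."""
    # MAX_WBITS | 16 tells zlib to expect a gzip header and trailer.
    decompressor = zlib.decompressobj(wbits=zlib.MAX_WBITS | 16)
    for compressed in iter_part_chunks(part_paths, chunk_size):
        pending = compressed
        while pending:
            # Cap each output chunk so highly compressible input cannot balloon in memory.
            output = decompressor.decompress(pending, chunk_size)
            if output:
                yield output
            pending = decompressor.unconsumed_tail
    remainder = decompressor.flush()
    if remainder:
        yield remainder


def count_lines(part_paths: Iterable[str]) -> int:
    """Example consumer that keeps only a counter, not the decompressed data."""
    return sum(chunk.count(b"\n") for chunk in stream_decompressed(part_paths))
```

A consumer like `count_lines` stays flat in memory. A consumer that appends every decoded record to an in-memory cache will grow toward the uncompressed size, and that growth would not be affected by disk_quota.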

Candidate Solutions

CloudEvents standard

https://github.com/cloudevents/spec/blob/master/primer.md

The source generates a message where the event is encapsulated in a protocol. The event arrives at a destination, triggering an action which is provided with the event data.

A source is a specific instance of a source-type which allows for staging and test instances. Open source software of a specific source-type may be deployed by multiple companies or providers.

Events can be delivered through various industry standard protocols (e.g. HTTP, AMQP, MQTT, SMTP), open-source protocols (e.g. Kafka, NATS), or platform/vendor specific protocols (AWS Kinesis, Azure Event Grid).

An action processes an event defining a behavior or effect which was triggered by a specific occurrence from a specific source. While outside of the scope of the specification, the purpose of generating an event is typically to allow other systems to easily react to changes in a source that they do not control. The source and action are typically built by different developers. Often the source is a managed service and the action is custom code in a serverless Function (such as AWS Lambda or Google Cloud Functions).

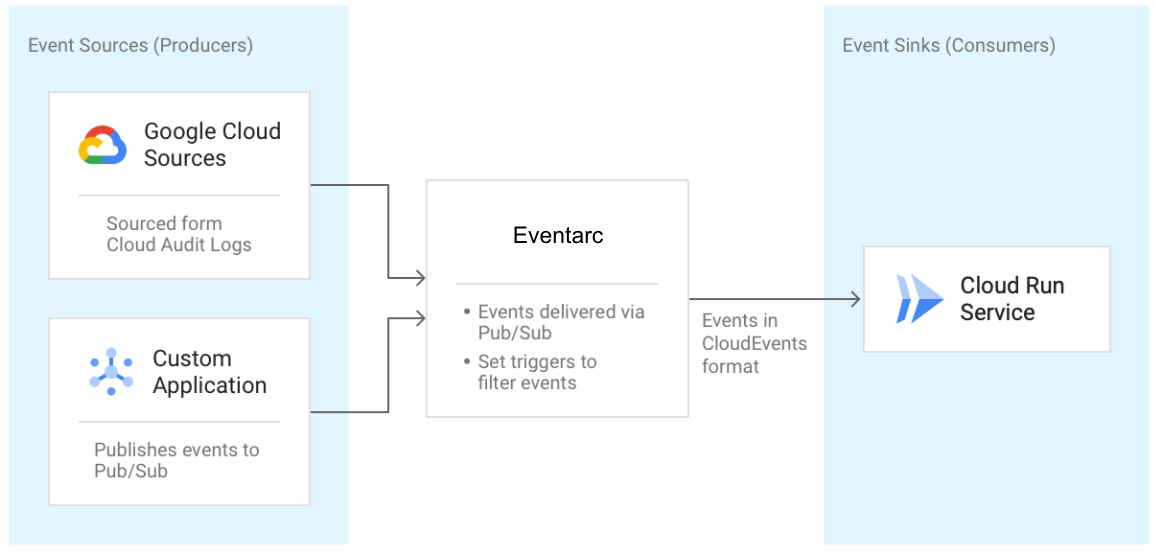
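The source, event, and action roles described above can be sketched with a small example. This is a minimal illustration using only the Python standard library, not an official SDK. The attribute names (`specversion`, `id`, `source`, `type`, `time`, `datacontenttype`, `data`) come from the CloudEvents 1.0 specification; the helper names, the source URI, and the event type used in the usage note are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Optional

# Context attributes that CloudEvents 1.0 requires on every event.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def make_event(source: str, event_type: str, data: Any) -> Dict[str, Any]:
    """Build an event envelope as a source would, in JSON-friendly form."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


def missing_attributes(event: Dict[str, Any]) -> List[str]:
    """Return the required attributes that are absent or empty."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


def dispatch(event: Dict[str, Any],
             actions: Dict[str, Callable[[Any], Any]]) -> Optional[Any]:
    """Run the action registered for the event type, passing it the event data."""
    missing = missing_attributes(event)
    if missing:
        raise ValueError("not a valid CloudEvent, missing: " + ", ".join(missing))
    action = actions.get(event["type"])
    if action is None:
        return None  # no action is subscribed to this type
    return action(event["data"])
```

For example, a staging source could call `make_event("/inventory/staging", "com.example.vehicle.decoded", {"vin": "TESTVIN"})`, serialize the result with `json.dumps`, and send it over any of the protocols listed above. The receiver would parse it and hand it to `dispatch`, without either side knowing how the other is implemented.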

Eventarc notifications for Google Cloud Run serverless functions for automation

https://www.infoq.com/news/2020/11/eventarc-google-cloud-run/

Customers can use Eventarc to address use cases such as video analysis, file conversion, new user signup, application monitoring, and hundreds of others by acting on events that originate from Cloud Storage, BigQuery, Firestore, and more than 60 other Google Cloud sources. Eventarc supports:

- Receiving events from 60+ Google Cloud sources (via Cloud Audit Logs)

- Receiving events from custom sources by publishing to Pub/Sub – customer's code can send events to signal between microservices

- Adherence to the CloudEvents standard for all events, regardless of source, to ensure a consistent developer experience

- On-demand scalability and no minimum fees

The underlying delivery mechanism in Eventarc is Pub/Sub, with its topics and subscriptions. Event sources produce events and publish them to a Pub/Sub topic in any format. The events are then delivered to the Cloud Run sinks. Developers can use Eventarc for applications running on Cloud Run to trigger a data processing pipeline from a Cloud Storage event (via Cloud Audit Logs), or to signal between microservices with an event from a custom source (published to Cloud Pub/Sub).

Source: https://codelabs.developers.google.com/codelabs/cloud-run-events#2
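On the receiving side, a Cloud Run sink sees each event as an HTTP request. The sketch below assumes the event arrives in the binary content mode of the CloudEvents HTTP binding, where context attributes travel as `ce-`-prefixed headers and the payload is the request body. It uses only the Python standard library; the function name, the header values in the usage note, and the choice to return a plain dict are illustrative assumptions, not part of Eventarc's API.

```python
from typing import Dict, Mapping, Tuple
from urllib.parse import unquote

REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def parse_binary_cloudevent(headers: Mapping[str, str],
                            body: bytes) -> Tuple[Dict[str, str], bytes]:
    """Split an HTTP request into CloudEvents attributes and the raw payload."""
    attributes: Dict[str, str] = {}
    for name, value in headers.items():
        lowered = name.lower()  # HTTP header names are case-insensitive
        if lowered.startswith("ce-"):
            # The HTTP binding percent-encodes some characters in header values.
            attributes[lowered[3:]] = unquote(value)
        elif lowered == "content-type":
            # In binary mode, Content-Type carries the datacontenttype attribute.
            attributes["datacontenttype"] = value
    missing = [name for name in REQUIRED_ATTRIBUTES if name not in attributes]
    if missing:
        raise ValueError("missing CloudEvents headers: " + ", ".join(missing))
    return attributes, body
```

A handler could then branch on `attributes["type"]`, for example treating an audit-log event and a Pub/Sub message differently, while the parsing step stays the same for every source.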
