Key Points

References

Key Concepts

Event Stream Use Case: a logical value chain that connects across networks using GEMS (Global Event Management System)

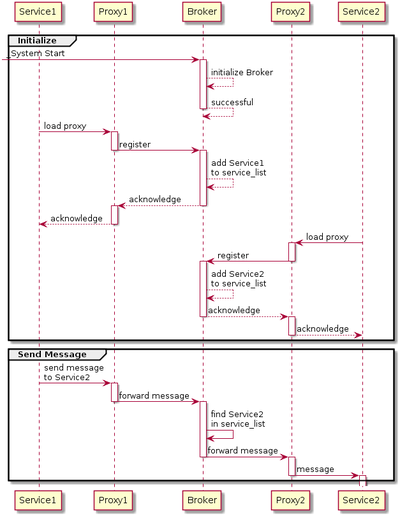

What software is needed to implement the event message subscription scenario defined below?

ESB (Enterprise Service Bus) vs Message Broker

https://stackoverflow.com/questions/3280576/how-does-rabbitmq-compare-to-mule

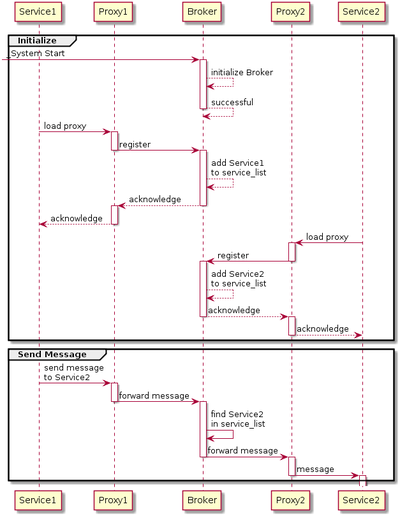

Mule is an ESB (Enterprise Service Bus). RabbitMQ is a message broker.

An ESB provides added layers atop a message broker such as routing, transformations and business process management. It is a mediator between applications, integrating Web Services, REST endpoints, database connections, email and ftp servers - you name it. It is a high-level integration backbone which orchestrates interoperability within a network of applications that speak different protocols.

A message broker is a lower level component which enables you as a developer to relay raw messages between publishers and subscribers, typically between components of the same system but not always. It is used to enable asynchronous processing to keep response times low. Some tasks take longer to process and you don't want them to hold things up if they're not time-sensitive. Instead, post a message to a queue (as a publisher) and have a subscriber pick it up and process it "later".
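To make the publisher/subscriber hand-off concrete, here is a minimal in-process analogy. It assumes a `java.util.concurrent.BlockingQueue` as a stand-in for a broker queue; a real broker adds persistence, network transport and acknowledgements. The class name `AsyncQueueDemo` and the `__STOP__` sentinel are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// In-process analogy only: a BlockingQueue stands in for a broker queue.
class AsyncQueueDemo {
    // Hypothetical sentinel message that tells the subscriber to stop.
    static final String STOP = "__STOP__";

    static List<String> run(List<String> tasks) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        List<String> processed = new ArrayList<>();

        // Subscriber: picks messages up "later", on its own thread.
        Thread subscriber = new Thread(() -> {
            try {
                while (true) {
                    String msg = queue.take();            // blocks until a message arrives
                    if (STOP.equals(msg)) break;
                    processed.add("done:" + msg);         // stand-in for slow, non-urgent work
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        subscriber.start();

        try {
            // Publisher: posts each task and moves on without waiting for the result.
            for (String task : tasks) queue.put(task);
            queue.put(STOP);
            subscriber.join();                            // demo only: wait so the results can be read
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return processed;
    }
}
```

In a real system the publisher would return to its caller right after `put`, which is where the lower response time comes from; the `join` exists only so the demo can inspect the results.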

ESBs include IBM IIB, Mule, ServiceMix (for Java JBI clients), etc.

Message brokers include IBM MQ, ActiveMQ, RabbitMQ, Kafka, etc.

While ESB tools are a reliable way to connect applications, their technology is increasingly seen as outdated as the cloud dominates digital ecosystems and as companies grow at a rate that demands ever faster adaptability across their systems.

ESB comeback?

Unless you are running everything on a single cloud platform and have few integration needs with customers, vendors and partners, an ESB (or event bus) may speed integration. If everything you need is on your cloud platform, you are probably fine using only the commercial services that AWS, Azure, etc. offer.

Kafka as a Message Broker

https://hackernoon.com/introduction-to-message-brokers-part-1-apache-kafka-vs-rabbitmq-8fd67bf68566

Kafka can be used as a message broker by dynamically routing each message to a single listener and, if it is not handled, re-routing it to the next listener.

Routed messages are marked as no longer available on the queue, so the same message is not sent twice to different handlers.

Java Spring Boot app using Kafka as message broker tutorial

https://developer.okta.com/blog/2019/11/19/java-kafka

kafka-java-tutorial-developer.okta.com-Kafka with Java Build a Secure Scalable Messaging App.pdf

ActiveMQ

https://activemq.apache.org/

Apache ActiveMQ® is the most popular open source, multi-protocol, Java-based message broker. It supports industry standard protocols so users get the benefits of client choices across a broad range of languages and platforms. Connect from clients written in JavaScript, C, C++, Python, .Net, and more. Integrate your multi-platform applications using the ubiquitous AMQP protocol. Exchange messages between your web applications using STOMP over websockets. Manage your IoT devices using MQTT. Support your existing JMS infrastructure and beyond. ActiveMQ offers the power and flexibility to support any messaging use-case.

ActiveMQ Artemis: a high-performance, non-blocking architecture for the next generation of messaging applications.

- JMS 1.1 & 2.0 + Jakarta Messaging 2.0 & 3.0 with full client implementations including JNDI

- High availability using shared storage or network replication

- Simple & powerful protocol agnostic addressing model

- Flexible clustering for distributing load

- Advanced journal implementations for low-latency persistence as well as JDBC

- High feature parity with ActiveMQ "Classic" to ease migration

- Asynchronous mirroring for disaster recovery

- Data Driven Load Balance

Using Artemis with Spring Framework

http://www.masterspringboot.com/messaging/artemismq/jms-messaging-with-spring-boot-and-artemis-mq/

JMS Messaging with Spring Boot and Artemis MQ


Use Spring Boot to create and consume JMS messages with the Artemis MQ broker.

In this example we will start an embedded Artemis MQ server as part of our application and start sending and consuming messages. Apache Artemis MQ is an open source message broker written in Java together with a full Java Message Service (JMS) client.

ActiveMQ Artemis with Spring Boot on Kubernetes

https://www.springcloud.io/post/2022-08/activemq-artemis-spring-boot-k8s/#gsc.tab=0

This article will teach you how to run ActiveMQ on Kubernetes and integrate it with your app through Spring Boot. We will deploy a clustered ActiveMQ broker using a dedicated operator. Then we are going to build and run two Spring Boot apps. The first of them is running in multiple instances and receiving messages from the queue, while the second is sending messages to that queue. In order to test the ActiveMQ cluster, we will use Kind. The consumer app connects to the cluster using several different modes. We will discuss those modes in detail.

ActiveMQ Concepts

https://www.datadoghq.com/blog/activemq-architecture-and-metrics/#:~:text=Both%20ActiveMQ%20versions%20are%20capable,to%20the%20topic%20(in%20ActiveMQ

The ActiveMQ broker routes each message through a messaging endpoint called a destination (in ActiveMQ Classic) or an address (in Artemis). Both ActiveMQ versions are capable of point-to-point messaging—in which the broker routes each message to one of the available consumers in a round-robin pattern—and publish/subscribe (or “pub/sub”) messaging—in which the broker delivers each message to every consumer that is subscribed to the topic (in ActiveMQ Classic) or address (in ActiveMQ Artemis).

Classic sends point-to-point messages via queues and pub/sub messages via topics. Artemis, on the other hand, uses queues to support both types of messaging and uses routing types to impose the desired behavior. In the case of point-to-point messaging, the broker sends a message to an address configured with the anycast routing type, and the message is placed into a queue where it will be retrieved by a single consumer. (An anycast address typically has a single queue, but it can contain multiple queues if necessary, for example to support a cluster of ActiveMQ servers.) In the case of pub/sub messaging, the address contains a queue for each topic subscription and the broker uses the multicast routing type to send a copy of each message to each subscription queue.
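A toy model can make the anycast/multicast distinction concrete. This is not the Artemis API: `ToyAddress` and its methods are hypothetical, and the sketch models only how a message is placed into the queues of one address, not consumers or clustering.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of an Artemis-style address; not the Artemis API.
class ToyAddress {
    enum RoutingType { ANYCAST, MULTICAST }

    private final RoutingType routingType;
    // queue name -> messages placed in that queue
    private final Map<String, List<String>> queues = new LinkedHashMap<>();
    private int nextAnycastQueue = 0;

    ToyAddress(RoutingType routingType) { this.routingType = routingType; }

    void createQueue(String name) { queues.put(name, new ArrayList<>()); }

    void send(String message) {
        List<List<String>> targets = new ArrayList<>(queues.values());
        if (targets.isEmpty()) return;                  // in this toy, a message with no queue is dropped
        if (routingType == RoutingType.MULTICAST) {
            // pub/sub: every subscription queue gets its own copy
            for (List<String> q : targets) q.add(message);
        } else {
            // point-to-point: exactly one queue receives it (round-robin if there are several)
            targets.get(nextAnycastQueue % targets.size()).add(message);
            nextAnycastQueue++;
        }
    }

    List<String> queue(String name) { return queues.get(name); }
}
```

With `MULTICAST`, two subscription queues each receive their own copy of a message; with `ANYCAST`, each message lands in exactly one queue.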

ActiveMQ implements the functionality specified in the Java Message Service (JMS) API, which defines a standard for creating, sending, and receiving messages. ActiveMQ client applications—producers and consumers—written in Java can use the JMS API to send and receive messages. Additionally, both Classic and Artemis support non-JMS clients written in Node.js, Ruby, PHP, Python, and other languages, which can connect to the ActiveMQ broker via the AMQP, MQTT, and STOMP protocols.


Implementing Apache ActiveMQ-style broker meshes with Apache Artemis

https://developers.redhat.com/articles/2021/06/30/implementing-apache-activemq-style-broker-meshes-apache-artemis

Artemis is easier to maintain than ActiveMQ Classic, which is important if you're basing a commercial product on it. Its smaller feature set means a smaller overall implementation, which fits well with developing microservices.

This article describes subtleties that can lead to lost messages in an Artemis active-active mesh. That architecture consists of multiple message brokers interconnected in a mesh, each broker with its own message storage, where all are simultaneously accepting messages from publishers and distributing them to subscribers. ActiveMQ and Artemis use different policies for message distribution. I will explain the differences and show a few ways to make Artemis work more like ActiveMQ in an active-active scenario.

Artemis supports broker discovery for message meshes

The final vital piece of configuration assembles the various broker connectors into a mesh. Artemis provides various discovery mechanisms by which brokers can find one another in the network. However, if you're more familiar with ActiveMQ, you're probably used to specifying the mesh members explicitly.

Artemis provides a number of discovery mechanisms, allowing clients to determine the network topology without additional configuration. These don't work with all wire protocols (notably, there is no discovery mechanism for Advanced Message Queuing Protocol), and ActiveMQ users are probably familiar with configuring the client's connection targets explicitly. The usual mechanism is to list all the brokers in the mesh in the client's connection URI.

You should be able to connect consumers to all the nodes, produce messages to any node, and have them routed to the appropriate consumer. However, this mesh won't behave exactly like ActiveMQ, because Artemis mesh operation is not governed by client demand.

Message forwarding in Artemis is more flexible than Classic ActiveMQ

In ActiveMQ, network connectors are described as "demand forwarding." This means that messages are accepted on a particular broker and remain there until a particular client requests them. If there are no clients for a particular queue, messages remain on the original broker until that situation changes.

On Artemis, forwarding behavior is controlled by the brokers, and is only loosely associated with client load. In the previous section's configuration, I set message-load-balancing=ON_DEMAND. This instructs the brokers not to forward messages for specific queues to brokers where there are, at present, no consumers for those queues. So if there are no consumers connected at all, the routing behavior is similar to that of ActiveMQ: Messages will accumulate on the broker that originally received them. If I had set message-load-balancing=STRICT, the receiving broker would have divided the messages evenly between the brokers that defined that queue. With this configuration, the presence or absence of clients should be irrelevant ... except it isn't quite that simple, and the complications are sometimes important.
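The forwarding choice described above can be sketched as a simplified model. It covers only the two policies named in the quote (`ON_DEMAND` and `STRICT`), deliberately ignores the complications the author mentions, and uses hypothetical class names; it is not how Artemis is implemented.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of the forwarding choice described above; the real Artemis
// cluster logic (redistribution, local preference, filters) is more involved.
class ForwardingModel {
    enum LoadBalancing { STRICT, ON_DEMAND }

    // A broker in the mesh that defines the queue, with its current consumer count.
    static final class Broker {
        final String name;
        final int consumers;
        Broker(String name, int consumers) { this.name = name; this.consumers = consumers; }
    }

    // Returns the brokers that the receiving broker would spread messages across.
    static List<String> candidates(Broker receiving, List<Broker> others, LoadBalancing policy) {
        List<Broker> all = new ArrayList<>();
        all.add(receiving);
        all.addAll(others);
        List<String> result = new ArrayList<>();
        for (Broker b : all) {
            // STRICT: every broker defining the queue gets its share, consumers or not.
            // ON_DEMAND: only brokers that currently have consumers are eligible.
            if (policy == LoadBalancing.STRICT || b.consumers > 0) result.add(b.name);
        }
        // ON_DEMAND with no consumers anywhere: messages accumulate on the receiving broker.
        if (result.isEmpty()) result.add(receiving.name);
        return result;
    }
}
```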

ActiveMQ Differences: Topics vs Queues

https://activemq.apache.org/how-does-a-queue-compare-to-a-topic

Topics

In JMS a Topic implements publish and subscribe semantics. When you publish a message it goes to all the subscribers who are interested - so zero to many subscribers will receive a copy of the message. Only subscribers who had an active subscription at the time the broker receives the message will get a copy of the message.

Queues

A JMS Queue implements load balancer semantics. A single message will be received by exactly one consumer. If there are no consumers available at the time the message is sent it will be kept until a consumer is available that can process the message. If a consumer receives a message and does not acknowledge it before closing then the message will be redelivered to another consumer. A queue can have many consumers with messages load balanced across the available consumers.

So Queues implement a reliable load balancer in JMS.
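The topic and queue rules above can be illustrated in memory. `QueueVsTopic`, `ToyTopic` and `ToyQueue` are hypothetical names; this sketches the delivery rules only and is not a JMS implementation (no threads, sessions or persistence).

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Consumer;

// In-memory illustration of JMS queue vs topic semantics; not a JMS implementation.
class QueueVsTopic {

    // Topic: a copy goes to every subscriber active at publish time; nothing is kept for later.
    static final class ToyTopic {
        private final List<Consumer<String>> subscribers = new ArrayList<>();
        void subscribe(Consumer<String> s) { subscribers.add(s); }
        void publish(String msg) { for (Consumer<String> s : subscribers) s.accept(msg); }
    }

    // Queue: each message goes to exactly one consumer and waits if none is ready.
    static final class ToyQueue {
        private final Deque<String> pending = new ArrayDeque<>();
        void send(String msg) { pending.addLast(msg); }

        // A consumer takes the next message. If it closes without acknowledging,
        // the message returns to the head of the queue for redelivery to another consumer.
        String receive(boolean acknowledge) {
            String msg = pending.pollFirst();
            if (msg != null && !acknowledge) pending.addFirst(msg);
            return msg;
        }

        int depth() { return pending.size(); }
    }
}
```

The topic subscriber misses anything published before it subscribed, while the queue keeps an unacknowledged message until some consumer acknowledges it.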

RabbitMQ

https://www.rabbitmq.com/

VMware provides support for open source RabbitMQ, available for a subscription fee.

RabbitMQ can run in cloud environments

RabbitMQ Tutorials ***

https://www.rabbitmq.com/getstarted.html

https://en.wikipedia.org/wiki/RabbitMQ

RabbitMQ is an open-source message-broker software (sometimes called message-oriented middleware) that originally implemented the Advanced Message Queuing Protocol (AMQP) and has since been extended with a plug-in architecture to support Streaming Text Oriented Messaging Protocol (STOMP), MQ Telemetry Transport (MQTT), and other protocols.[1]

Written in Erlang, the RabbitMQ server is built on the Open Telecom Platform framework for clustering and failover. Client libraries to interface with the broker are available for all major programming languages. The source code is released under the Mozilla Public License.

Message Broker

A message broker (also known as an integration broker or interface engine[1]) is an intermediary computer program module that translates a message from the formal messaging protocol of the sender to the formal messaging protocol of the receiver. Central reasons for using a message-based communications protocol include its ability to store (buffer), route, or transform messages while conveying them from senders to receivers.

The following represent other examples of actions that might be handled by the broker:[2][3]

- Route messages to one or more destinations

- Transform messages to an alternative representation

- Perform message aggregation, decomposing messages into multiple messages and sending them to their destination, then recomposing the responses into one message to return to the user

- Interact with an external repository to augment a message or store it

- Invoke web services to retrieve data

- Respond to events or errors

- Provide content and topic-based message routing using the publish–subscribe pattern

The Advanced Message Queuing Protocol (AMQP)

AMQP is an open standard application layer protocol for message-oriented middleware. The defining features of AMQP are message orientation, queuing, routing (including point-to-point and publish-and-subscribe), reliability and security.[1]

AMQP mandates the behavior of the messaging provider and client to the extent that implementations from different vendors are interoperable.

The basic unit of data in the AMQP link protocol is a frame. There are nine AMQP frame bodies defined that are used to initiate, control and tear down the transfer of messages between two peers. These are:

- open (the connection)

- begin (the session)

- attach (the link)

- transfer

- flow

- disposition

- detach (the link)

- end (the session)

- close (the connection)

The link protocol is at the heart of AMQP.
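As a sketch of the framing layer, assuming the AMQP 1.0 transport specification: each frame starts with an 8-byte header (SIZE, DOFF, TYPE, channel), and each of the nine frame bodies listed above is a performative identified by a descriptor code. The code builds only the header and leaves out encoding the performative body with the AMQP type system; `AmqpFraming` is a hypothetical name, and the codes should be checked against the specification before being relied on.

```java
import java.nio.ByteBuffer;

// Sketch of AMQP 1.0 transport framing: builds only the fixed 8-byte frame header.
class AmqpFraming {
    // The nine performatives (frame bodies) with their AMQP 1.0 descriptor codes.
    enum Performative {
        OPEN(0x10), BEGIN(0x11), ATTACH(0x12), FLOW(0x13), TRANSFER(0x14),
        DISPOSITION(0x15), DETACH(0x16), END(0x17), CLOSE(0x18);

        final int descriptorCode;
        Performative(int code) { this.descriptorCode = code; }
    }

    static final int HEADER_SIZE = 8;
    static final byte DOFF_NO_EXTENDED_HEADER = 2; // data offset, in 4-byte words
    static final byte TYPE_AMQP = 0x00;            // 0x01 would mark a SASL frame

    // Layout: SIZE (4 bytes) | DOFF (1) | TYPE (1) | CHANNEL (2), big-endian.
    static byte[] header(int bodyLength, int channel) {
        return ByteBuffer.allocate(HEADER_SIZE)    // ByteBuffer is big-endian by default
                .putInt(HEADER_SIZE + bodyLength)  // SIZE counts the header as well
                .put(DOFF_NO_EXTENDED_HEADER)
                .put(TYPE_AMQP)
                .putShort((short) channel)
                .array();
    }
}
```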

Streaming Text Oriented Messaging Protocol (STOMP)

Simple (or Streaming) Text Oriented Messaging Protocol (STOMP), formerly known as TTMP, is a simple text-based protocol, designed for working with message-oriented middleware (MOM). It provides an interoperable wire format that allows STOMP clients to talk with any message broker supporting the protocol.
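Because STOMP is text-based, a frame can be assembled with plain string handling. A sketch, assuming STOMP 1.2 framing (command line, `header:value` lines, a blank line, the body, then a NUL octet); `StompFrames` is a hypothetical helper, not part of any client library.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Builds a STOMP 1.2 frame as text: command, header lines, blank line, body, NUL.
class StompFrames {
    static String frame(String command, Map<String, String> headers, String body) {
        StringBuilder sb = new StringBuilder(command).append('\n');
        for (Map.Entry<String, String> h : headers.entrySet()) {
            sb.append(escape(h.getKey())).append(':').append(escape(h.getValue())).append('\n');
        }
        sb.append('\n');   // blank line ends the headers
        sb.append(body);
        sb.append('\0');   // NUL octet terminates the frame
        return sb.toString();
    }

    // STOMP 1.2 header escaping. This sketch escapes every frame's headers,
    // although the spec exempts CONNECT and CONNECTED frames.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\r", "\\r").replace("\n", "\\n").replace(":", "\\c");
    }

    // Convenience builder for a plain-text SEND frame.
    static String send(String destination, String body) {
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("destination", destination);
        headers.put("content-type", "text/plain");
        return frame("SEND", headers, body);
    }
}
```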

MQ Telemetry Transport (MQTT)

MQTT is a lightweight, publish-subscribe network protocol that transports messages between devices. The protocol usually runs over TCP/IP; however, any network protocol that provides ordered, lossless, bi-directional connections can support MQTT.[1] It is designed for connections with remote locations where resource constraints exist or the network bandwidth is limited. The protocol is an open OASIS standard and an ISO recommendation (ISO/IEC 20922).
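MQTT subscribers select messages with topic filters that can use the `+` (single-level) and `#` (multi-level) wildcards. The sketch below assumes the MQTT 3.1.1/5 matching rules and an already-validated filter; `MqttTopics` is a hypothetical name, and broker features such as shared subscriptions are out of scope.

```java
// MQTT topic-filter matching with the '+' (single level) and '#' (multi level) wildcards.
// Sketch only: it assumes the filter is already valid ('#' last, wildcards fill whole levels).
class MqttTopics {
    static boolean matches(String filter, String topic) {
        // Filters starting with a wildcard must not match topics beginning with '$' (e.g. $SYS).
        if (topic.startsWith("$") && (filter.startsWith("+") || filter.startsWith("#"))) {
            return false;
        }
        String[] f = filter.split("/", -1);
        String[] t = topic.split("/", -1);
        for (int i = 0; i < f.length; i++) {
            if (f[i].equals("#")) {
                return true;                  // '#' matches the parent level and everything below
            }
            if (i >= t.length) {
                return false;                 // the topic ran out of levels
            }
            if (!f[i].equals("+") && !f[i].equals(t[i])) {
                return false;                 // literal level mismatch
            }
        }
        return f.length == t.length;          // no leftover topic levels
    }
}
```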

Compare Kafka and RabbitMQ

https://www.simplilearn.com/kafka-vs-rabbitmq-article

kafka-vs-rabbitmq-simplilearn-Kafka vs RabbitMQ What Are the Biggest Differences and Which Should You Learn.pdf

Message brokers are software modules that let applications, services, and systems communicate and exchange information. Message brokers do this by translating messages between formal messaging protocols, enabling interdependent services to directly “talk” with one another, even if they are written in different languages or running on other platforms.

Message brokers validate, route, store, and deliver messages to the designated recipients. The brokers operate as intermediaries between other applications, letting senders issue messages without knowing the consumers’ locations, whether they’re active or not, or even how many of them exist.

Publish/subscribe, by contrast, is a message distribution pattern in which producers publish each message they want without addressing specific consumers, and every interested subscriber receives a copy.

Data engineers and scientists refer to pub/sub as a broadcast-style distribution method, featuring a one-to-many relationship between the publisher and the consumers.

Kafka vs RabbitMQ Concepts

https://www.instaclustr.com/blog/rabbitmq-vs-kafka/

rabbitmq-vs-kafka-concepts-instaclustr-RabbitMQ vs Apache Kafka Key Differences and Use Cases.pdf


Event Buses

Mitt > a tiny JavaScript event emitter to publish and listen to events within a single app (in-process only)

https://www.npmjs.com/package/mitt

```javascript
import mitt from 'mitt'

const emitter = mitt()

// listen to an event
emitter.on('foo', e => console.log('foo', e))

// listen to all events
emitter.on('*', (type, e) => console.log(type, e))

// fire an event
emitter.emit('foo', { a: 'b' })
```
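For comparison with the Java-based tools elsewhere on this page, the same in-process pattern can be sketched in Java. `TinyEmitter` is a hypothetical class, not an existing library; like mitt, it calls the handlers for the event type first and then the wildcard handlers.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;
import java.util.function.Consumer;

// A minimal in-process event bus in the spirit of mitt; names are illustrative only.
class TinyEmitter {
    private final Map<String, List<Consumer<Object>>> handlers = new HashMap<>();
    private final List<BiConsumer<String, Object>> wildcard = new ArrayList<>();

    // listen to one event type
    void on(String type, Consumer<Object> handler) {
        handlers.computeIfAbsent(type, k -> new ArrayList<>()).add(handler);
    }

    // listen to all events; the handler also receives the event type
    void onAny(BiConsumer<String, Object> handler) { wildcard.add(handler); }

    // fire an event: typed handlers first, then wildcard handlers
    void emit(String type, Object event) {
        for (Consumer<Object> h : handlers.getOrDefault(type, Collections.emptyList())) h.accept(event);
        for (BiConsumer<String, Object> h : wildcard) h.accept(type, event);
    }
}
```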

Simple Java Events - use JavaBeans property-change events for objects in the SAME process - see the Groovy event example

ex-expando-events-gen-v1.groovy

Groovy
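A minimal sketch of same-process events with the JDK's `java.beans.PropertyChangeSupport`; `AccountBean` and its `status` property are hypothetical. Listeners run synchronously on the caller's thread, so this does not replace a broker for cross-process delivery.

```java
import java.beans.PropertyChangeListener;
import java.beans.PropertyChangeSupport;

// A JavaBean that publishes property-change events to listeners in the same JVM.
class AccountBean {
    private final PropertyChangeSupport changes = new PropertyChangeSupport(this);
    private String status = "pending";

    void addListener(PropertyChangeListener listener) {
        changes.addPropertyChangeListener(listener);
    }

    void setStatus(String newStatus) {
        String old = this.status;
        this.status = newStatus;
        // No event is fired when old and new values are equal and non-null.
        changes.firePropertyChange("status", old, newStatus);
    }
}
```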

Open-source event buses

https://levelup.gitconnected.com/overview-of-different-event-bus-implementations-f8e639994c7c

Java Message Service (JMS)

https://www.oracle.com/technical-resources/articles/java/intro-java-message-service.html

JMS is a Java messaging API standard that defines a common interface for Java-based messaging (event bus) implementations such as ActiveMQ.

features > async, portable, lightweight, Java runtime only

supports: point-to-point (P2P) queues, pub/sub topics

Apache Kafka

https://kafka.apache.org/intro

Apache Kafka is an open-source, highly scalable and fault-tolerant distributed event streaming platform that is often used as a high-performance event bus for big data and streaming applications. It provides low-latency and high-throughput processing capabilities. Kafka is often used for real-time data analytics, real-time monitoring, and real-time data processing. It provides a rich set of features for handling event streams, including built-in data compression, data partitioning, and event replication.

supports:

- To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

- To store streams of events durably and reliably for as long as you want.

- To process streams of events as they occur or retrospectively.

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol.
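The store-and-replay capabilities listed above rest on an append-only log that consumers read through their own offsets. The toy model below illustrates that idea only; it is not the Kafka client API (no partitions, brokers or network commits), and `ToyEventLog` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy append-only log with per-consumer-group offsets, modelling the idea behind a Kafka partition.
class ToyEventLog {
    private final List<String> records = new ArrayList<>();        // reading never removes records
    private final Map<String, Integer> groupOffsets = new HashMap<>();

    // publish (write): append and return the record's offset
    int append(String event) {
        records.add(event);
        return records.size() - 1;
    }

    // subscribe (read): each group resumes from its own offset
    List<String> poll(String group, int maxRecords) {
        int from = groupOffsets.getOrDefault(group, 0);
        int to = Math.min(records.size(), from + maxRecords);
        groupOffsets.put(group, to);                                // "commit" after reading
        return new ArrayList<>(records.subList(from, to));
    }

    // process retrospectively: rewind (or skip) a group to another offset
    void seek(String group, int offset) {
        groupOffsets.put(group, Math.max(0, Math.min(offset, records.size())));
    }
}
```

Because reading does not remove records, a second group sees the full history, and `seek` lets a group reprocess events retrospectively.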

RabbitMQ

https://www.rabbitmq.com/

RabbitMQ is an open-source messaging broker that implements the Advanced Message Queuing Protocol (AMQP). It is often used as an event bus for microservice architectures, where the need for loose coupling and easy scalability is important. RabbitMQ provides a flexible and reliable messaging infrastructure and supports a variety of programming languages and platforms.

RabbitMQ is lightweight and easy to deploy on premises and in the cloud. It supports multiple messaging protocols. RabbitMQ can be deployed in distributed and federated configurations to meet high-scale, high-availability requirements.

less scalable than Kafka

Microsoft Azure Event Grid

https://learn.microsoft.com/en-us/azure/event-grid/overview

Microsoft Azure Event Grid is a fully managed event routing service provided by Microsoft Azure. It provides a highly scalable and highly available event infrastructure for event-driven architectures and serverless applications. Event Grid supports a variety of event sources and can route events to multiple event handlers in real time.

it charges based on the number of events processed and the number of operations performed

may not provide the level of customization and flexibility required for some scenarios.

can limit interoperability with other platforms and technologies that use different event processing solutions.

Google Cloud Pub/Sub

https://cloud.google.com/pubsub

Google Cloud Pub/Sub is a fully managed messaging service provided by Google Cloud, that allows applications to publish and subscribe to messages in a scalable and reliable manner. Pub/Sub supports a variety of programming languages and platforms and provides powerful features for handling events, such as dead-letter topics, push and pull delivery, and message filtering.

it charges based on the number of messages processed and the amount of data stored.

can limit interoperability with other platforms and technologies

Amazon Simple Notification Service (SNS)

https://aws.amazon.com/sns/

Amazon Simple Notification Service (SNS) is a fully managed messaging service provided by Amazon Web Services (AWS), that enables applications to send and receive notifications. It provides a simple and scalable messaging infrastructure for event-driven applications. SNS supports a variety of event sources and can route events to multiple event handlers, including email, SMS, and other AWS services.

it charges based on the number of API requests, notification deliveries, and data transferred.

can limit interoperability with other platforms and technologies

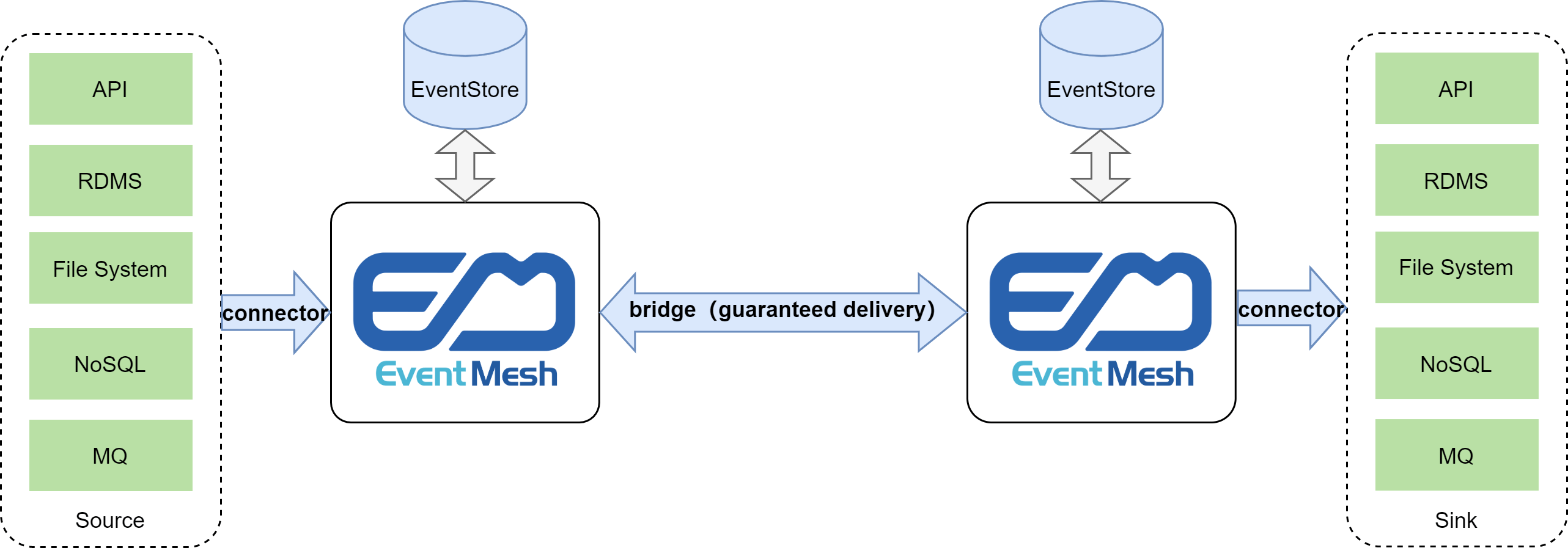

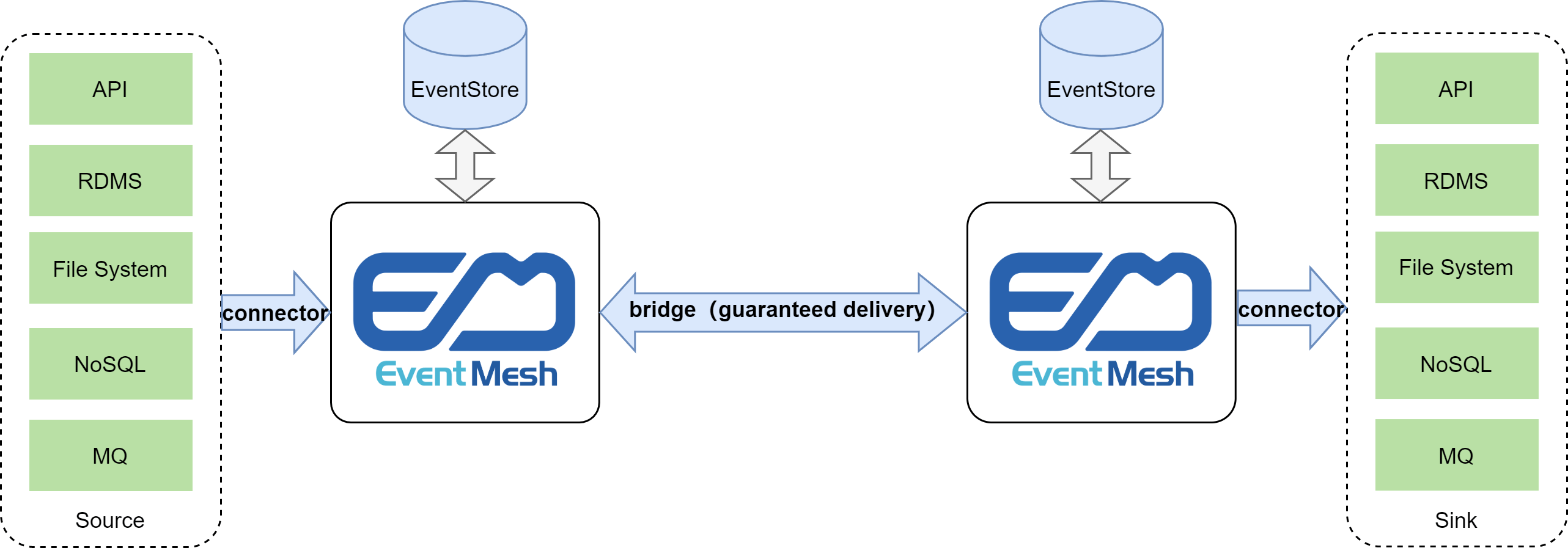

Apache EventMesh

https://github.com/apache/eventmesh

https://eventmesh.apache.org/

Event Mesh on Low-code App Development Solutions swtc page

Apache EventMesh is a new generation serverless event middleware for building distributed event-driven applications.

Apache EventMesh itself has four primary components:

- eventmesh-runtime: a middleware that transmits events between event producers and consumers and supports cloud-native apps and microservices.

- eventmesh-sdk-java: currently supports HTTP and TCP protocols.

- eventmesh-connector-api: an API layer based on the OpenMessaging API and SPI plugins, which can be implemented by popular event stores such as IMDG, messaging engines, and OSS.

- eventmesh-connector-rocketmq: an implementation of eventmesh-connector-api that publishes events to, or subscribes to events from, RocketMQ as the event store.

Apache EventMesh provides standard protocols such as CloudEvents, interfaces such as HTTP and TCP, and a pluggable storage engine with Apache RocketMQ as the default back-end storage. Compared to other mesh-based applications on the market, our platform supports features such as extremely low latency, stability, and a cloud-native architecture.

It needs to address the complexity introduced by heavy clients based on the pull model, event-based streaming, and the openness of event metadata and mixed-media storage.

Apache EventMesh has a vast amount of features to help users achieve their goals. Let us share with you some of the key features EventMesh has to offer:

- Built around the CloudEvents specification.

- Rapidly extensible interconnector layer, such as sources or sinks for SaaS, cloud services, databases, etc.

- Rapidly extensible storage layer such as Apache RocketMQ, Apache Kafka, Apache Pulsar, RabbitMQ, Redis, Pravega, and RDBMS (in progress) using JDBC.

- Rapidly extensible controller such as Consul, Nacos, ETCD and Zookeeper.

- Guaranteed at-least-once delivery.

- Deliver events between multiple EventMesh deployments.

- Event schema management by catalog service.

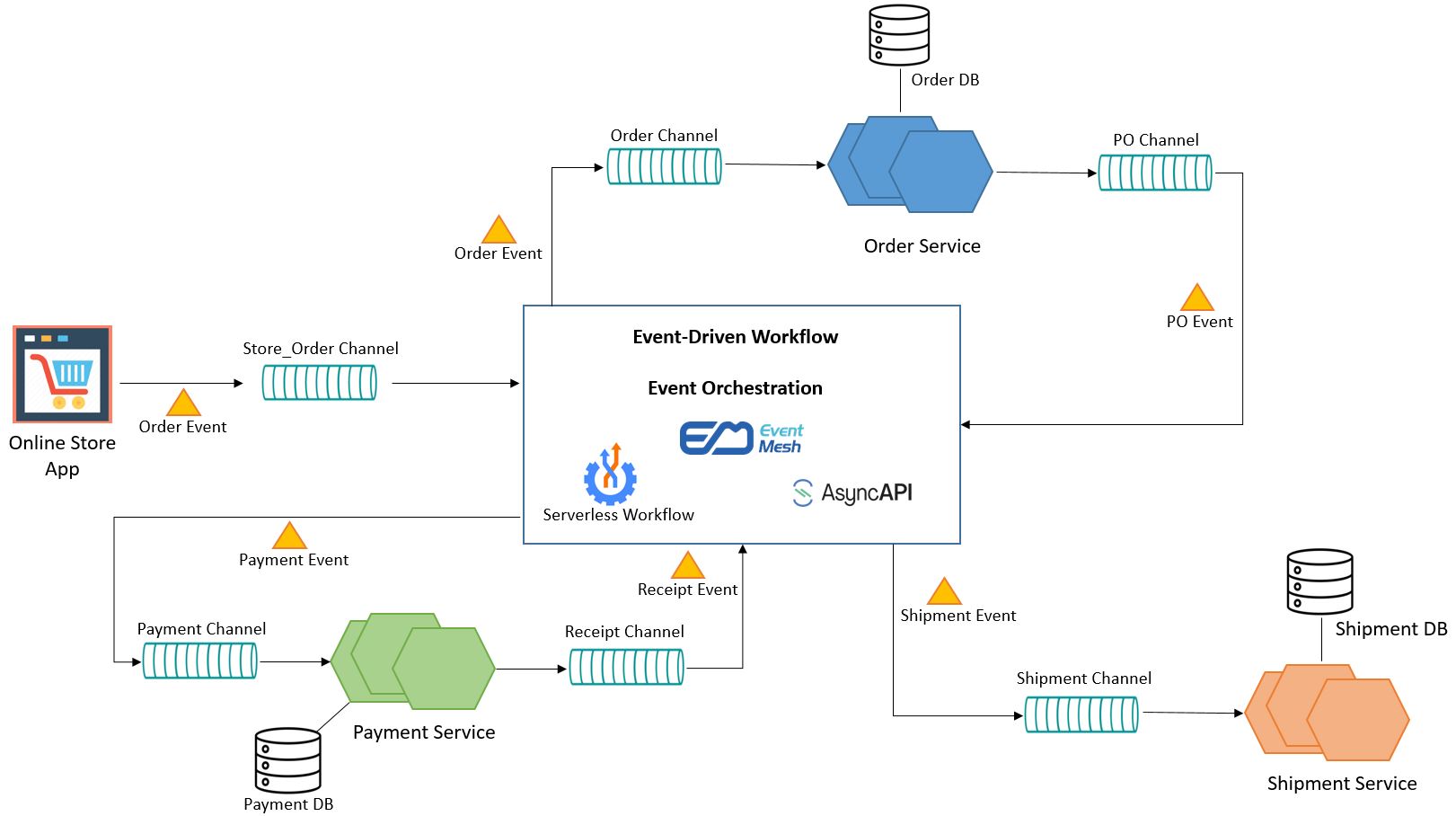

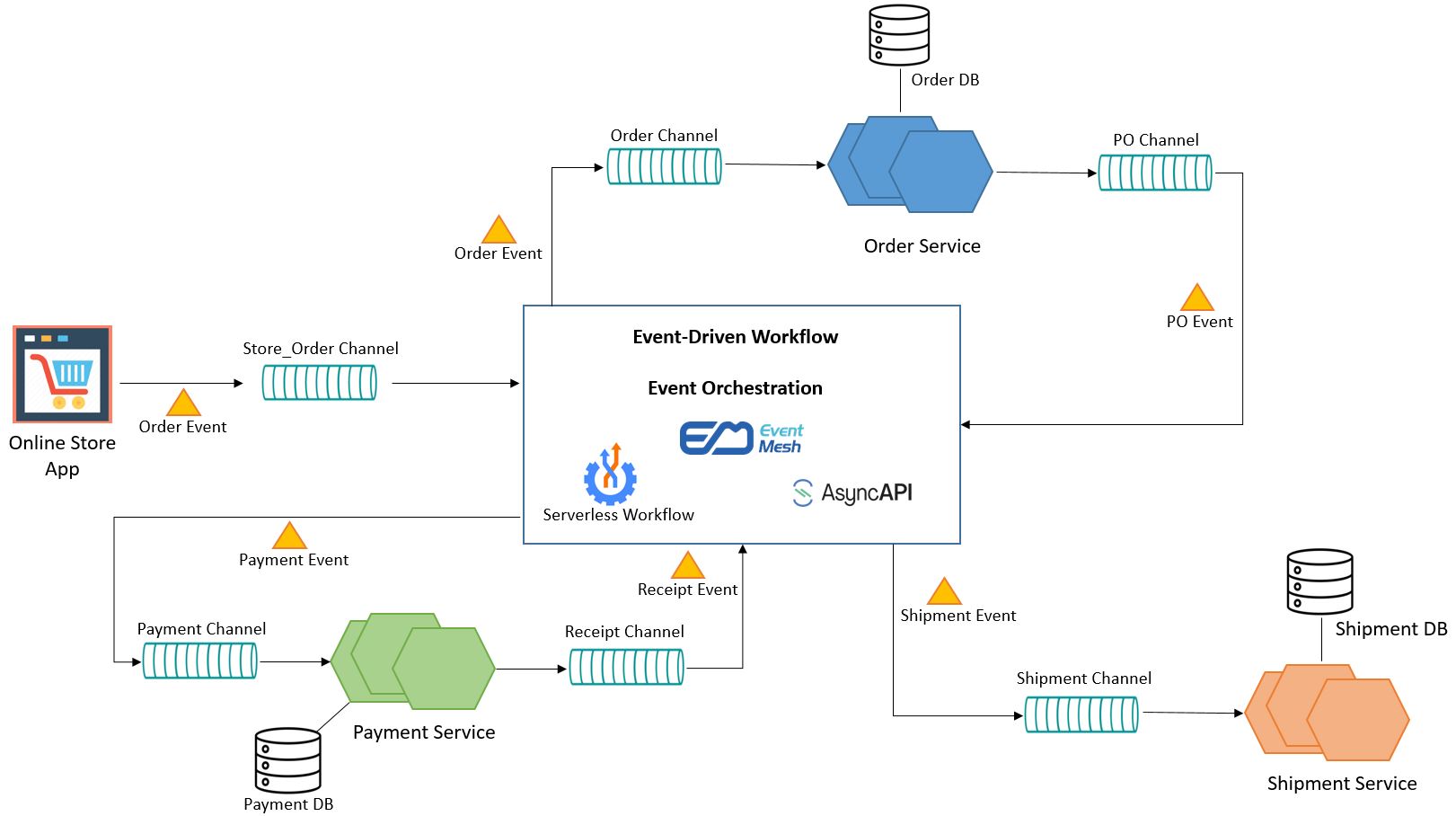

- Powerful event orchestration by Serverless workflow engine.

- Powerful event filtering and transformation.

- Rapid, seamless scalability.

- Easy function development and framework integration.
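Guaranteed at-least-once delivery means a consumer can receive the same event more than once, for example after a redelivery. A common companion pattern is an idempotent consumer that remembers processed event ids. The sketch below is generic, not an EventMesh API; `IdempotentConsumer` is a hypothetical name, and it keeps ids in memory where a real service would persist them.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.Consumer;

// Idempotent consumer for at-least-once delivery: duplicates are acknowledged but not reprocessed.
class IdempotentConsumer {
    private final Set<String> processedIds = new HashSet<>();  // production code would persist this
    private final Consumer<String> handler;

    IdempotentConsumer(Consumer<String> handler) { this.handler = handler; }

    // Returns true if the event was handled, false if it was a duplicate delivery.
    boolean onEvent(String eventId, String payload) {
        if (processedIds.contains(eventId)) {
            return false;
        }
        handler.accept(payload);   // if this throws, the id is not recorded and a redelivery can retry
        processedIds.add(eventId);
        return true;
    }
}
```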

Event Mesh - open-source, cloud-native eventing infrastructure that decouples the application and backend middleware layers.

http://eventmesh.incubator.apache.org/

Features

- Communication Protocol: EventMesh can communicate with clients over TCP, HTTP, or gRPC.

- CloudEvents: EventMesh supports the CloudEvents specification as the format of the events. CloudEvents is a specification for describing event data in common formats to provide interoperability across services, platforms, and systems.

- Schema Registry: EventMesh implements a schema registry that receives and stores schemas from clients and provides an interface for other clients to retrieve schemas.

- Observability: EventMesh exposes a range of metrics, such as the average latency of the HTTP protocol and the number of delivered messages. The metrics can be collected and analyzed with Prometheus or OpenTelemetry.

- Event Workflow Orchestration: EventMesh Workflow can receive an event and decide which command to trigger next based on the workflow definitions and the current workflow state. The workflow definition can be written with the Serverless Workflow DSL.
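As an illustration of the CloudEvents format listed above, here is an event built by hand in the CloudEvents 1.0 JSON format. It assumes the spec's required attributes (`specversion`, `id`, `source`, `type`); the attribute values and the `CloudEventJson` helper are made up, and a real project would more likely use the CloudEvents Java SDK together with a JSON library.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Builds a CloudEvents 1.0 event in JSON by hand (illustration only).
class CloudEventJson {
    static String toJson(String id, String source, String type, String dataJson) {
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("specversion", "1.0");                  // required
        attrs.put("id", id);                              // required: unique per source
        attrs.put("source", source);                      // required: URI-reference of the producer
        attrs.put("type", type);                          // required: event type name
        attrs.put("datacontenttype", "application/json"); // optional

        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> a : attrs.entrySet()) {
            sb.append('"').append(a.getKey()).append("\":\"").append(escape(a.getValue())).append("\",");
        }
        sb.append("\"data\":").append(dataJson);          // dataJson is assumed to be valid JSON already
        return sb.append('}').toString();
    }

    // Minimal JSON string escaping: quotes and backslashes only, no control characters.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }
}
```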

Components

Apache EventMesh (Incubating) consists of multiple components that integrate different middlewares and messaging protocols to enhance the functionalities of the application runtime.

- eventmesh-runtime: The middleware that transmits events between producers and consumers, which supports cloud-native apps and microservices.

- eventmesh-sdk-java: The Java SDK that supports HTTP, TCP, and gRPC protocols.

- eventmesh-sdk-go: The Golang SDK that supports HTTP, TCP, and gRPC protocols.

- eventmesh-connector-plugin: The collection of plugins that connects middlewares such as Apache RocketMQ (implemented), Apache Kafka (in progress), Apache Pulsar (in progress), and Redis (in progress).

- eventmesh-registry-plugin: The collection of plugins that integrate service registries such as Nacos and etcd.

- eventmesh-security-plugin: The collection of plugins that implement security mechanisms, such as ACL (access control list), authentication, and authorization.

- eventmesh-protocol-plugin: The collection of plugins that implement messaging protocols, such as CloudEvents and MQTT.

- eventmesh-admin: The control plane that manages clients, topics, and subscriptions.

Event Mesh Solution Example

https://eventmesh.incubator.apache.org/docs/design-document/workflow

https://eventmesh.incubator.apache.org/docs/roadmap

Event Mesh architecture

https://github.com/apache/incubator-eventmesh

Apache EventMesh (Incubating) is a dynamic event-driven application multi-runtime used to decouple the application and backend middleware layer, which supports a wide range of use cases that encompass complex multi-cloud, widely distributed topologies using diverse technology stacks.

EventMesh roadmap

https://eventmesh.apache.org/docs/roadmap/

Future features not yet implemented

Docker EventMesh quickstart

Docker-based quickstart steps, if you prefer Docker:

Step 1: Deploy eventmesh-store using docker

Step 2: Start eventmesh-runtime using docker

Step 3: Run our demos

EventMesh documentation

Apache EventMesh Documentation

Apache EventMesh (Incubating) has a vast amount of features to help users achieve their goals. Let us share with you some of the key features EventMesh has to offer:

- Built around the CloudEvents specification.

- Rapidly extensible language SDKs around gRPC protocols.

- Rapidly extensible middleware by connectors such as Apache RocketMQ, Apache Kafka, Apache Pulsar, RabbitMQ, Redis, Pravega, and RDBMS (in progress) using JDBC.

- Rapidly extensible controller such as Consul, Nacos, ETCD and Zookeeper.

- Guaranteed at-least-once delivery.

- Deliver events between multiple EventMesh deployments.

- Event schema management by catalog service.

- Powerful event orchestration by Serverless workflow engine.

- Powerful event filtering and transformation.

- Rapid, seamless scalability.

- Easy function development and framework integration.

EventMesh at Webank

https://thestack.technology/apache-event-mesh/

Node-RED IoT event message framework with custom JS functions

https://nodered.org/

Low-code programming for event-driven applications for Node.js apps

https://opensource.com/life/16/5/getting-started-node-red

Node-RED prebuilt flows

https://flows.nodered.org/

-------------------------------

data services - Node-RED

-------------------------------

Node-RED basics video

https://www.youtube.com/watch?v=3AR432bguOY

Node-RED best practices video

https://www.youtube.com/watch?v=V0SmNcIYCtQ

Apache Data Services#ApachePulsar

https://pulsar.apache.org/

Solace Message Service and Event Hub with EDA - Event Driven Architecture (compare to EventMesh, Kafka, CouchDB)

https://solace.com/

Solace master data management with event mesh pub sub

https://solace.com/solutions/real-time-master-data-management/

Key Questions for Solace

- isolation > how to isolate client usage from Solace directly using wrappers or adapters to minimize future migration costs

- sustainability > how sustainable is a Solace implementation? economics, technology currency, adaptability, governance, integrations, migration?

- capability as GEMS > will Solace meet all capabilities for a GEMS - Global Event Management System except isolation and sustainability?
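The isolation question can be approached with a vendor-neutral interface (a ports-and-adapters style), so that application code never calls the Solace API directly. A sketch under that assumption: `EventGateway`, `InMemoryEventGateway` and `OrderService` are hypothetical names, and a Solace adapter would implement `EventGateway` by delegating to Solace's client library.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical vendor-neutral port: application code depends only on this interface,
// so swapping Solace for another broker means writing a new adapter, not touching callers.
interface EventGateway {
    void publish(String topic, String payload);
    void subscribe(String topic, Consumer<String> handler);
}

// In-memory adapter for tests; a Solace, Kafka or Artemis adapter would implement
// the same interface by delegating to that vendor's client library.
class InMemoryEventGateway implements EventGateway {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    @Override
    public void publish(String topic, String payload) {
        for (Consumer<String> h : subscribers.getOrDefault(topic, new ArrayList<>())) h.accept(payload);
    }

    @Override
    public void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, k -> new ArrayList<>()).add(handler);
    }
}

// Business code sees only EventGateway, never a vendor API.
class OrderService {
    private final EventGateway events;
    OrderService(EventGateway events) { this.events = events; }
    void placeOrder(String orderId) { events.publish("orders/placed", orderId); }
}
```

The migration cost then concentrates in one adapter class per broker, which is the property the isolation question asks about.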

TOC

- Introduction

- The Service Decomposition Paradox

- The Integration Conundrum

- The Incredible Dancing Microservices

- The Copernican Shift to Events

- Realizing the Agility Provided by a Modern Central Nervous System

- Think Event-Driven and Choreograph Service Execution

- Embrace Eventual Consistency

- Databases + CQRS

- Utilize Events to the UI

- Events as the Cornerstone of DR

- Use Vendor Agnostic Standard API or Wireline Protocols for Events

- Overcoming the Barriers Between You and Event-Driven Microservices

- Conclusion

Event Streaming Solutions Compared

https://solace.com/blog/comparing-event-streaming-platforms-and-tech-for-event-driven-architecture/

Key Questions for Event Driven Solutions

- What events already exist in a system? How can I subscribe to various events?

- Who created this or that event? And who can tell me more about it?

- What are my organization’s best practices and conventions for event stream definitions?

- What topic structure should I use to describe the events my application will produce?

- How is this event being used? By whom? By what applications? How often?

- Is the change I want to make to my application or event backward compatible?

- How can I control access to this or that event stream?

- Do capabilities include: replay? recovery? resiliency? traceability? dynamic configuration, testing?

Compare Event Mesh and Service Mesh Concepts - Solace perspective

https://solace.com/resources/event-mesh/wp-download-comparing-and-contrasting-service-mesh-and-event-mesh

Most modern microservices rely on both synchronous and asynchronous interactions, so for most companies it makes sense to deploy both a service mesh and an event mesh. From an infrastructure point of view, both can operate in the world of cloud virtualization and Kubernetes, but the event mesh can also connect event nodes that are cloud-native (with or without Kubernetes), on-premises, and even bare metal. In this way, an event mesh expands the reach of connected microservices and gives developers more deployment options. For example, Microservice A, driven by synchronous interactions, can inform other microservices of an event having occurred (e.g., “account opened”) by sending an event to an event broker. Other microservices (C, D and E) can perform actions as a result of this event. In general, it’s good design practice to use synchronous interactions when you need to and make as many interactions as possible asynchronous.

Potential Value Opportunities

Compare Pulsar, Solace & Artemis for Event Mesh and Streaming Apps

Detailed comparison of popularity, features, benefits, challenges, and case studies of Apache Pulsar, Solace, and Apache Artemis for distributed event mesh and streaming applications?

Conceptual Value Chain Services

multiple logical domains exist on a logical value chain

parties can be authorized to participate by role

distributed network workflows with distributed event subscriptions and a large set of service capabilities

See VCE > Value Chain Economies: micro economies for value-chain communities (VCC), section "FSN - Financial Services Network is a conceptual Financial Market Infrastructure (FMI)", for more details.
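Role-based participation in value-chain event streams could start as a simple role-to-stream permission check. The sketch below is hypothetical: `ValueChainAccess`, the role names and the stream names are invented for illustration, and a GEMS implementation would more likely enforce this in the broker or gateway than in application memory.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical role-based check for subscribing to value-chain event streams.
class ValueChainAccess {
    private final Map<String, Set<String>> streamsByRole = new HashMap<>();

    // Grant a role permission to subscribe to one or more streams.
    void grant(String role, String... streams) {
        streamsByRole.computeIfAbsent(role, r -> new HashSet<>()).addAll(Arrays.asList(streams));
    }

    // A party may subscribe if any of its roles grants the stream.
    boolean maySubscribe(Set<String> partyRoles, String stream) {
        for (String role : partyRoles) {
            if (streamsByRole.getOrDefault(role, new HashSet<>()).contains(stream)) return true;
        }
        return false;
    }
}
```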

Potential Challenges

Candidate Solutions

https://sourceforge.net/directory/investment-management/mac/

QuickFIX/J is a 100% Java implementation of the popular QuickFIX open source FIX protocol engine. QuickFIX/J features include support for FIX protocol versions 4.0 through 4.4 and 5.0/FIXT1.1 (www.fixprotocol.org). Please note that the SourceForge SVN repo is read-only. The current repo can be found here:

https://github.com/quickfix-j

Programmers Guide

https://ref.onixs.biz/java-fix-engine-guide/programming-guide.html#:~:text=Resources-,Introduction,enable%20applications%20written%20in%20Java.

Onix Solutions Java FIX Engine is a simple fully Java compliant tool that will FIX-enable applications written in Java.

The Engine provides the following services:

- manages a network connection

- manages the session layer (for the delivery of application messages)

- manages the application layer (defines business related data content)

- creates (outgoing) messages

- parses (incoming) messages

- validates messages

- persists messages (the ability to log data to a flat file)

- session recovery in accordance with the FIX state model

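```java
import biz.onixs.fix.engine.Engine;

public class SimpleEngine {
    public static void main(String[] args) {
        try {
            // Initialize the engine before creating any FIX sessions
            final Engine engine = Engine.init();
            // ... create sessions and exchange messages here ...
            engine.shutdown();
        } catch (Exception ex) {
            System.out.println("Exception: " + ex);
        }
    }
}
```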

Message Format

The general format of a FIX message is a stream of <tag>=<value> fields with a field delimiter (<SOH>) between fields in the stream (so-called "tag-value FIX format").
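A short sketch of the tag-value format: split on SOH, then on the first `=`. It also computes the CheckSum (tag 10) value, which FIX defines as the sum of all bytes before the `10=` field, modulo 256, written as three digits. `FixTagValue` is a hypothetical helper; it does not handle repeating groups or validate BodyLength (tag 9), which a FIX engine such as QuickFIX/J or the OnixS engine takes care of.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of the tag-value FIX format: <tag>=<value> fields separated by SOH (0x01).
// Uses a map, so repeated tags (repeating groups) are not handled.
class FixTagValue {
    static final char SOH = '\u0001';

    static Map<Integer, String> parse(String message) {
        Map<Integer, String> fields = new LinkedHashMap<>();
        for (String field : message.split(String.valueOf(SOH))) {
            if (field.isEmpty()) continue;
            int eq = field.indexOf('=');                  // the value may itself contain '='
            fields.put(Integer.parseInt(field.substring(0, eq)), field.substring(eq + 1));
        }
        return fields;
    }

    // CheckSum (10): sum of all bytes before the "10=" field, modulo 256, as three digits.
    static String checksum(String messageWithoutTrailer) {
        int sum = 0;
        for (byte b : messageWithoutTrailer.getBytes(StandardCharsets.US_ASCII)) sum += b & 0xFF;
        return String.format("%03d", sum % 256);
    }
}
```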

Step-by-step guide for Example


Recommended Next Steps

Related articles